---

license: other

datasets:

- nsmc

language:

- ko

---

# Korean GPT Bot Sentiment Classification (ko-gpt-bot-sc)

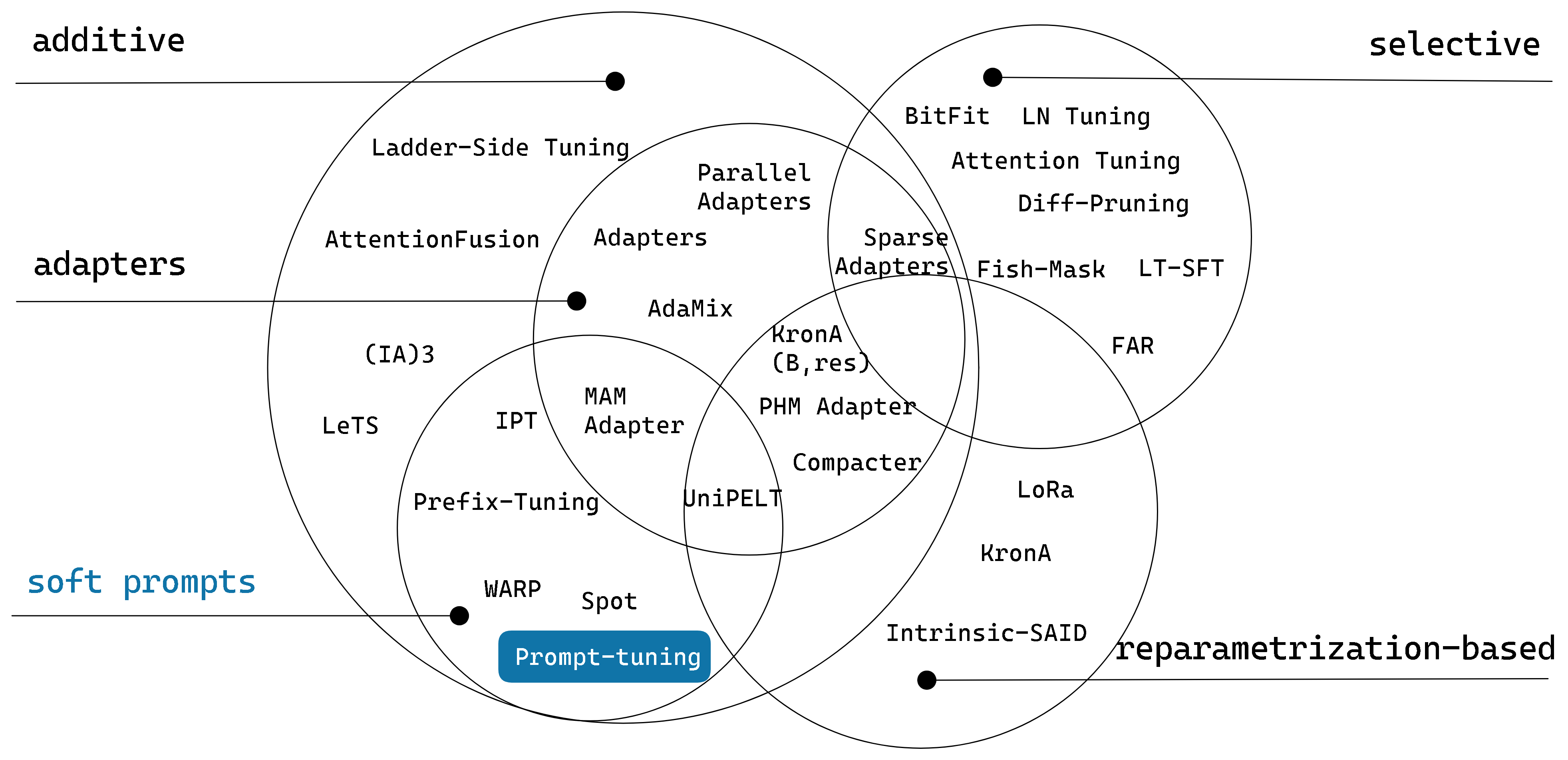

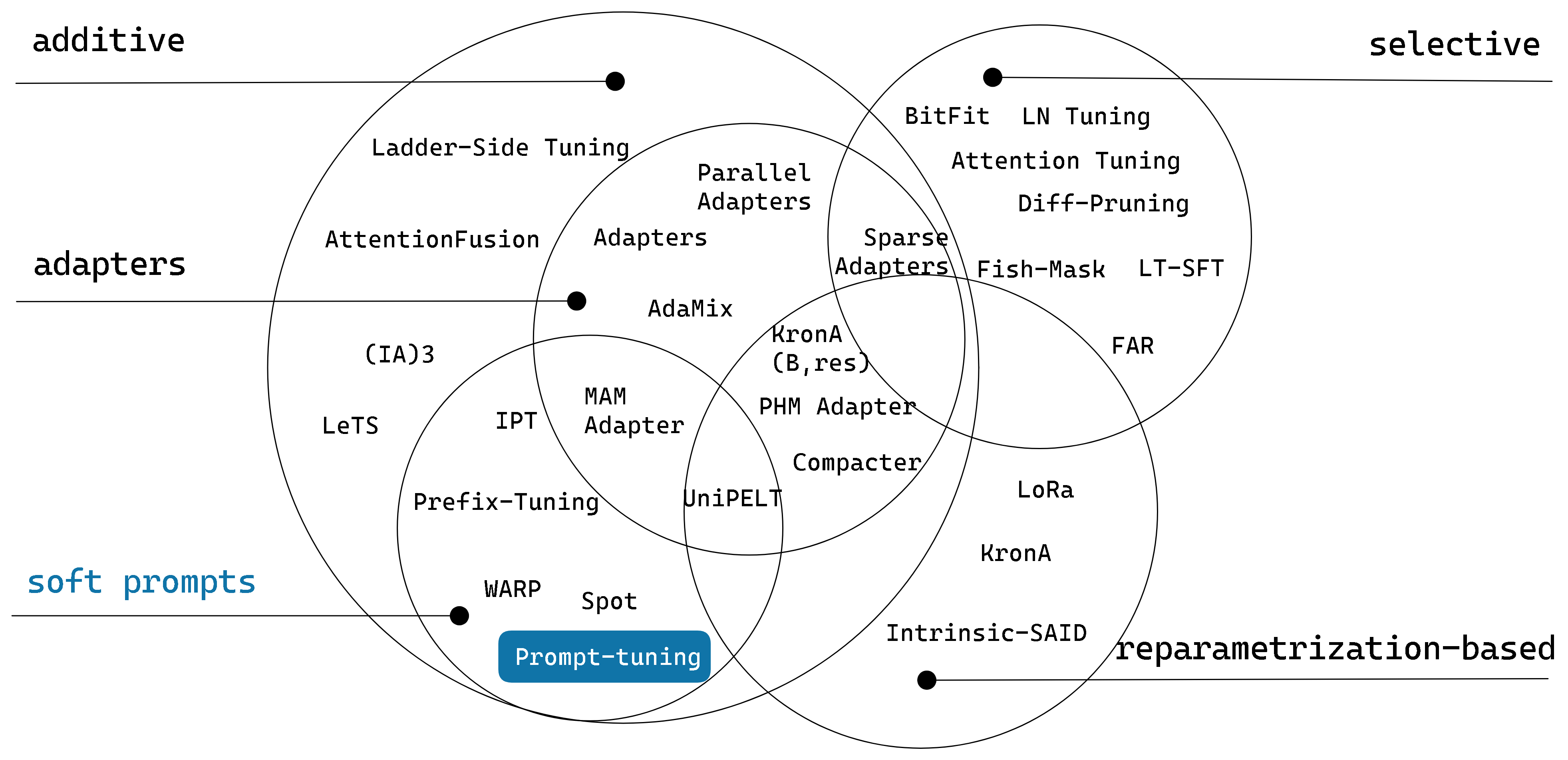

### Method

- Prompt tuning / prefix tuning (soft embedding): the pretrained weights are frozen and only a learned embedding is trained.

- Parameters

| Parameters | Count | Ratio |
|------------|---------------|--------|
| All | 6,173,039,616 | 100.0% |
| Trainable | 6,537,216 | 0.1% |
| Frozen | 6,166,502,400 | 99.9% |
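
As a rough illustration of the method, the sketch below assumes the simplest soft-prompt setup: a matrix of learned vectors is prepended to the frozen model's input embeddings and is the only thing updated during training. The helper names (`prepend_soft_prompt`, `soft_prompt_param_count`, `trainable_share`) are hypothetical, and this is not the training code used for this model. Taking the hidden size to be 4,096 (the width of `ln_f.weight` in the model summary), the 6,537,216 trainable parameters work out to 1,596 learned vectors. This card does not say how those vectors are arranged, for example as prompt tokens or as per-layer prefixes.

```python
from typing import List

Vector = List[float]


def prepend_soft_prompt(soft_prompt: List[Vector], input_embeds: List[Vector]) -> List[Vector]:
    """Place the learned prompt vectors in front of the token embeddings.

    In prompt tuning only `soft_prompt` receives gradient updates; the token
    embeddings come from the frozen model and are passed through unchanged.
    """
    if soft_prompt and input_embeds and len(soft_prompt[0]) != len(input_embeds[0]):
        raise ValueError("soft prompt and token embeddings must share the hidden size")
    return [list(v) for v in soft_prompt] + [list(v) for v in input_embeds]


def soft_prompt_param_count(num_vectors: int, hidden_size: int) -> int:
    """Trainable parameters: one hidden-size vector per learned position."""
    return num_vectors * hidden_size


def trainable_share(trainable: int, total: int) -> float:
    """Share of all parameters that are trained, in percent."""
    return 100.0 * trainable / total


if __name__ == "__main__":
    HIDDEN = 4096            # width of ln_f.weight in the model summary
    TOTAL = 6_173_039_616    # "All" row of the Parameters table
    TRAINABLE = 6_537_216    # "Trainable" row, equal to learned_embedding

    # Assumption: every trainable parameter belongs to a hidden-size vector.
    n_vectors = TRAINABLE // HIDDEN
    assert soft_prompt_param_count(n_vectors, HIDDEN) == TRAINABLE
    print(n_vectors)                                    # 1596
    print(f"{trainable_share(TRAINABLE, TOTAL):.1f}%")  # 0.1%
    print(f"{TOTAL - TRAINABLE:,}")                     # 6,166,502,400 (frozen)

    # Toy example with hidden size 2: two prompt vectors + three token vectors.
    prompt = [[0.1, 0.2], [0.3, 0.4]]
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print(len(prepend_soft_prompt(prompt, tokens)))     # 5
```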

### Model

```
LAYER NAME                          #PARAMS    RATIO   MEM(MB)
--model:                      6,177,233,921  100.00%  23552.28
  --learned_embedding:            6,537,216    0.11%     24.94
  --transformer:              5,906,391,041   95.62%  22519.09
    --wte
      --weight:                 264,241,152    4.28%   1008.00
    --h:                      5,642,141,697   91.34%  21511.06
      --0:                      205,549,569    3.33%    772.11
        --ln_1:                       8,192    0.00%      0.03
        --attn:                  71,303,169    1.15%    260.00
        --mlp:                  134,238,208    2.17%    512.08
      --1(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --2(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --3(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --4(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --5(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --6(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --7(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --8(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --9(partially shared):    201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --10(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --11(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --12(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --13(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --14(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --15(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --16(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --17(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --18(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --19(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --20(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --21(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --22(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --23(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --24(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --25(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --26(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
      --27(partially shared):   201,355,264    3.26%    768.11
        --ln_1:                       8,192    0.00%      0.03
        --attn(shared):          67,108,864    1.09%    256.00
        --mlp:                  134,238,208    2.17%    512.08
    --ln_f:                           8,192    0.00%      0.03
      --weight:                       4,096    0.00%      0.02
      --bias:                         4,096    0.00%      0.02
  --lm_head:                    264,305,664    4.28%   1008.25
    --weight:                   264,241,152    4.28%   1008.00
    --bias:                          64,512    0.00%      0.25
```
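
Each parent row in the summary is the sum of its children, and the MEM(MB) values for the weight-only rows match 4 bytes per parameter (fp32) divided by 2^20. The sketch below assumes that byte width, rebuilds the totals, and checks the memory column for those rows. Layer 0's `attn` row (and the totals that contain it) is left out of the memory check because it does not follow the 4-byte rule. The same arithmetic shows that the summary's total exceeds the Parameters table's "All" figure by 4,194,305. This is exactly the difference between layer 0's `attn` (71,303,169) and the shared `attn` rows of layers 1 to 27 (67,108,864). The names `ROWS`, `mib` and `tree_totals` are hypothetical.

```python
from typing import Dict, Tuple

# (#PARAMS, MEM(MB)) for weight-only rows copied from the model summary.
ROWS: Dict[str, Tuple[int, float]] = {
    "learned_embedding": (6_537_216, 24.94),
    "wte.weight": (264_241_152, 1008.00),
    "ln_1": (8_192, 0.03),
    "attn(shared)": (67_108_864, 256.00),
    "mlp": (134_238_208, 512.08),
    "1..27(partially shared)": (201_355_264, 768.11),
    "ln_f": (8_192, 0.03),
    "ln_f.weight": (4_096, 0.02),
    "lm_head": (264_305_664, 1008.25),
    "lm_head.bias": (64_512, 0.25),
}
ALL_FROM_PARAMETERS_TABLE = 6_173_039_616


def mib(num_params: int, bytes_per_param: int = 4) -> float:
    """MiB (2**20 bytes) at an assumed 4 bytes/parameter (fp32), rounded to 2 decimals."""
    return round(num_params * bytes_per_param / 2**20, 2)


def tree_totals() -> Dict[str, int]:
    """Rebuild the parent rows of the summary from their children."""
    layer0 = 8_192 + 71_303_169 + 134_238_208    # ln_1 + attn + mlp
    layer_k = 8_192 + 67_108_864 + 134_238_208   # ln_1 + attn(shared) + mlp
    h = layer0 + 27 * layer_k                    # layers 0..27
    transformer = 264_241_152 + h + 8_192        # wte.weight + h + ln_f
    lm_head = 264_241_152 + 64_512               # weight + bias
    model = 6_537_216 + transformer + lm_head    # learned_embedding + transformer + lm_head
    return {"layer0": layer0, "layer_k": layer_k, "h": h,
            "transformer": transformer, "lm_head": lm_head, "model": model}


if __name__ == "__main__":
    totals = tree_totals()
    for name, value in totals.items():
        print(f"{name}: {value:,}")
    for name, (params, mem) in ROWS.items():
        print(f"{name}: {mib(params)} MiB (table: {mem})")
    # Gap between the summary total and the Parameters table's "All" row.
    print(f"gap: {totals['model'] - ALL_FROM_PARAMETERS_TABLE:,}")  # gap: 4,194,305
```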

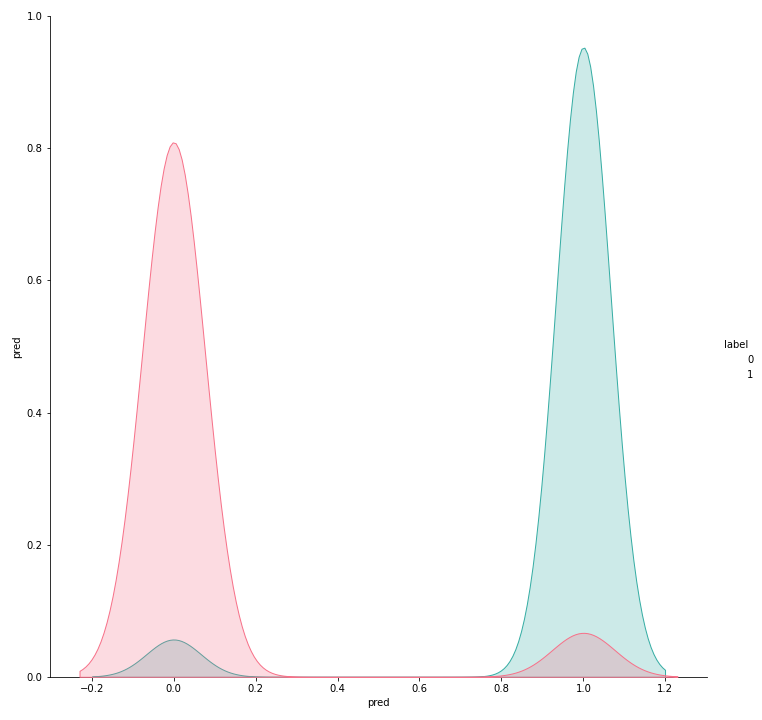

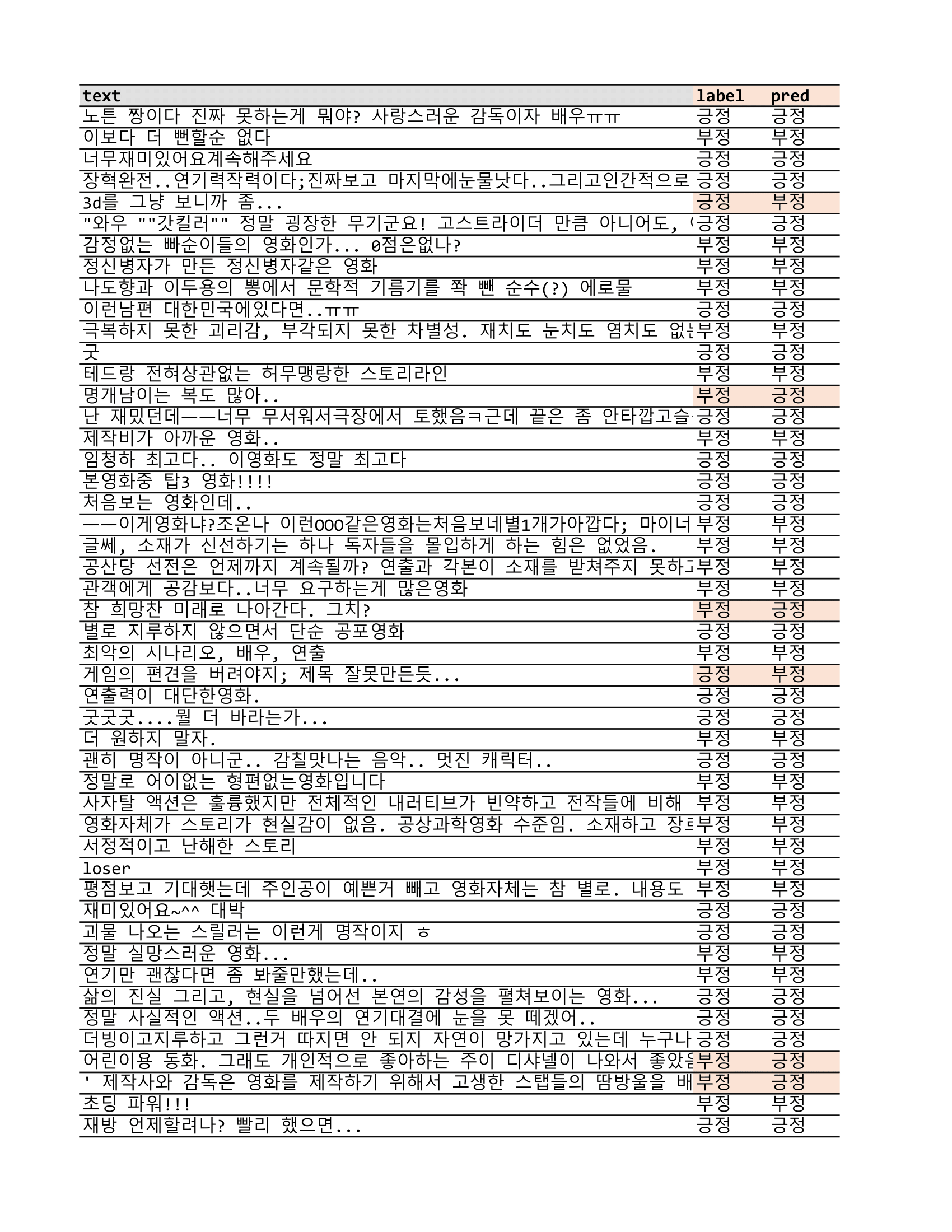

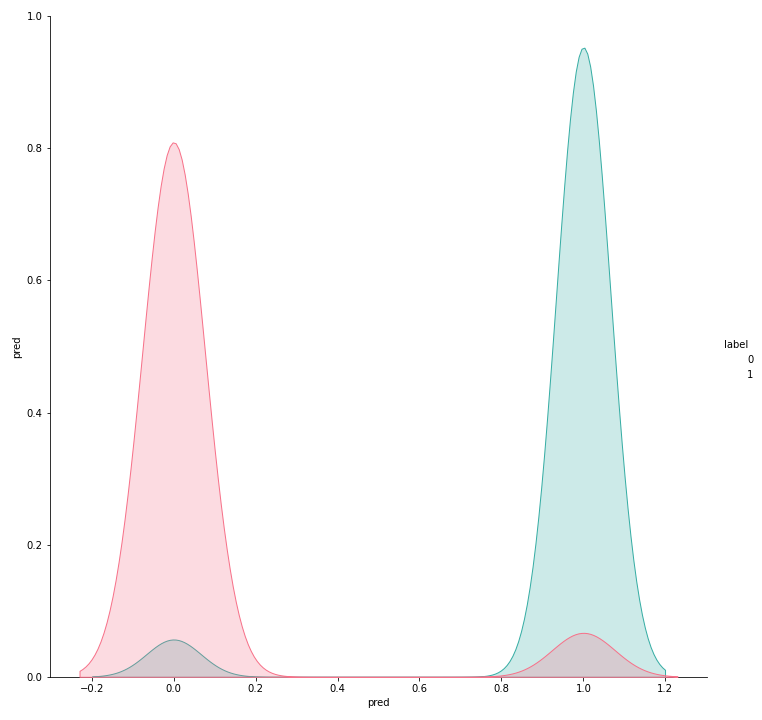

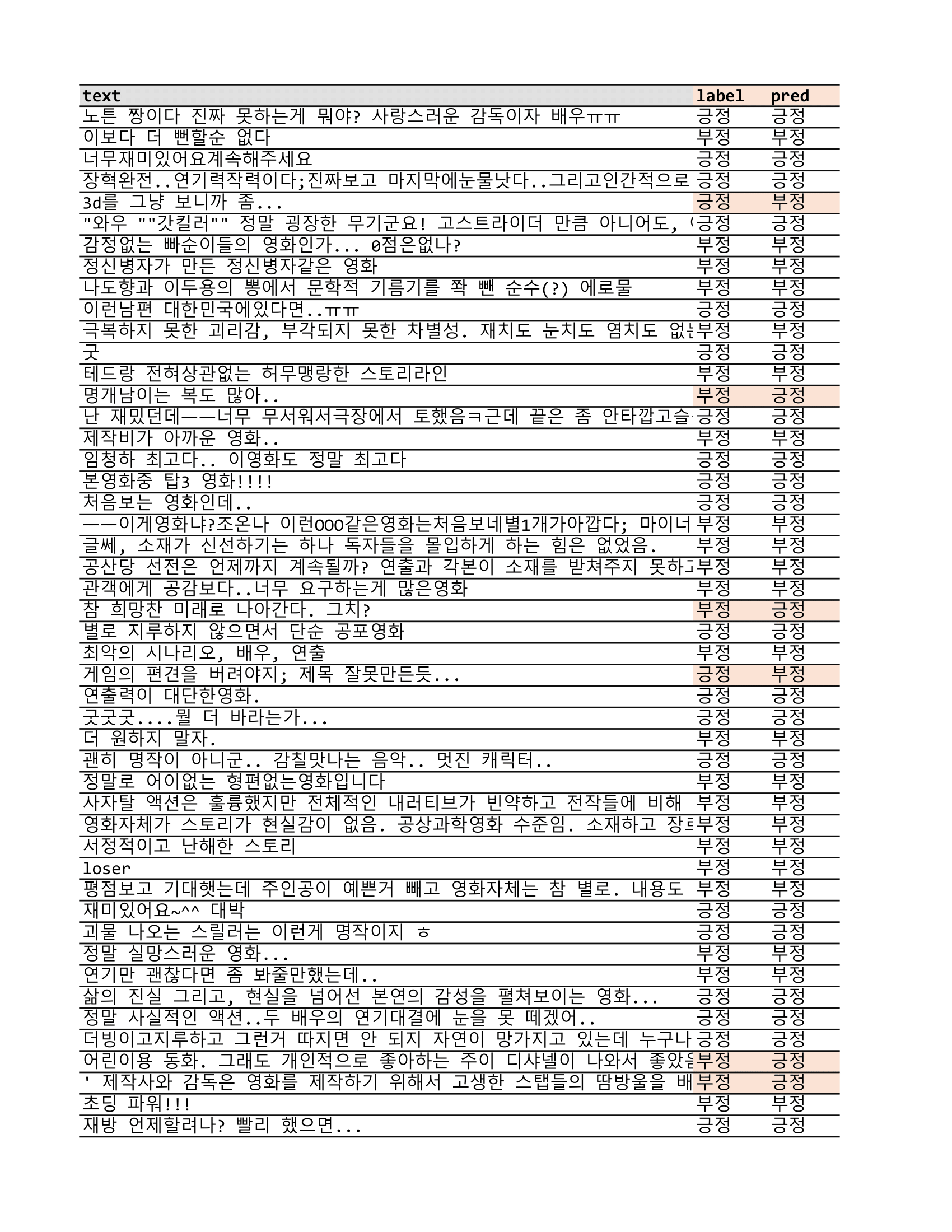

### Metrics

| Metric | Value |
|--------|--------|
| step | 520 |
| loss | 3.1814 |

| | precision | recall | f1-score | support |
|--------------|-----------|--------|----------|---------|
| 긍정 (positive) | 0.92549 | 0.944 | 0.934653 | 500 |
| 부정 (negative) | 0.942857 | 0.924 | 0.933333 | 500 |
| accuracy | | | 0.934 | 1000 |
| macro avg | 0.934174 | 0.934 | 0.933993 | 1000 |
| weighted avg | 0.934174 | 0.934 | 0.933993 | 1000 |
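
The classification report can be reproduced from a 2×2 confusion matrix. With 500 examples per class, a recall of 0.944 for 긍정 (positive) means 472 positives were labelled correctly and 28 were not. A recall of 0.924 for 부정 (negative) means 462 negatives were labelled correctly and 38 were not. The plain-Python sketch below, using a hypothetical helper `binary_report`, recomputes precision, recall, F1, accuracy and the macro average from those counts. Because both classes have the same support, the weighted average equals the macro average.

```python
from typing import Dict


def binary_report(tp: int, fn: int, fp: int, tn: int) -> Dict[str, Dict[str, float]]:
    """Per-class precision/recall/F1 for a two-class problem.

    `tp`/`fn`: positive examples predicted positive/negative.
    `fp`/`tn`: negative examples predicted positive/negative.
    """
    def scores(correct: int, predicted: int, actual: int) -> Dict[str, float]:
        precision = correct / predicted
        recall = correct / actual
        f1 = 2 * precision * recall / (precision + recall)
        return {"precision": precision, "recall": recall, "f1-score": f1, "support": actual}

    pos = scores(tp, tp + fp, tp + fn)   # column "긍정"
    neg = scores(tn, tn + fn, tn + fp)   # column "부정"
    total = tp + fn + fp + tn
    macro = {k: (pos[k] + neg[k]) / 2 for k in ("precision", "recall", "f1-score")}
    return {
        "positive": pos,
        "negative": neg,
        "macro avg": macro,
        "accuracy": {"accuracy": (tp + tn) / total},
    }


if __name__ == "__main__":
    # Counts implied by the table: 472 of 500 positives and 462 of 500 negatives correct.
    report = binary_report(tp=472, fn=28, fp=38, tn=462)
    for label, row in report.items():
        print(label, {k: round(v, 6) for k, v in row.items()})
```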


### References

- Prompt Tuning: **The Power of Scale for Parameter-Efficient Prompt Tuning**

- P-Tuning v2: **P-Tuning v2: Prompt Tuning Can Be Comparable to Fine-tuning Universally Across Scales and Tasks**
