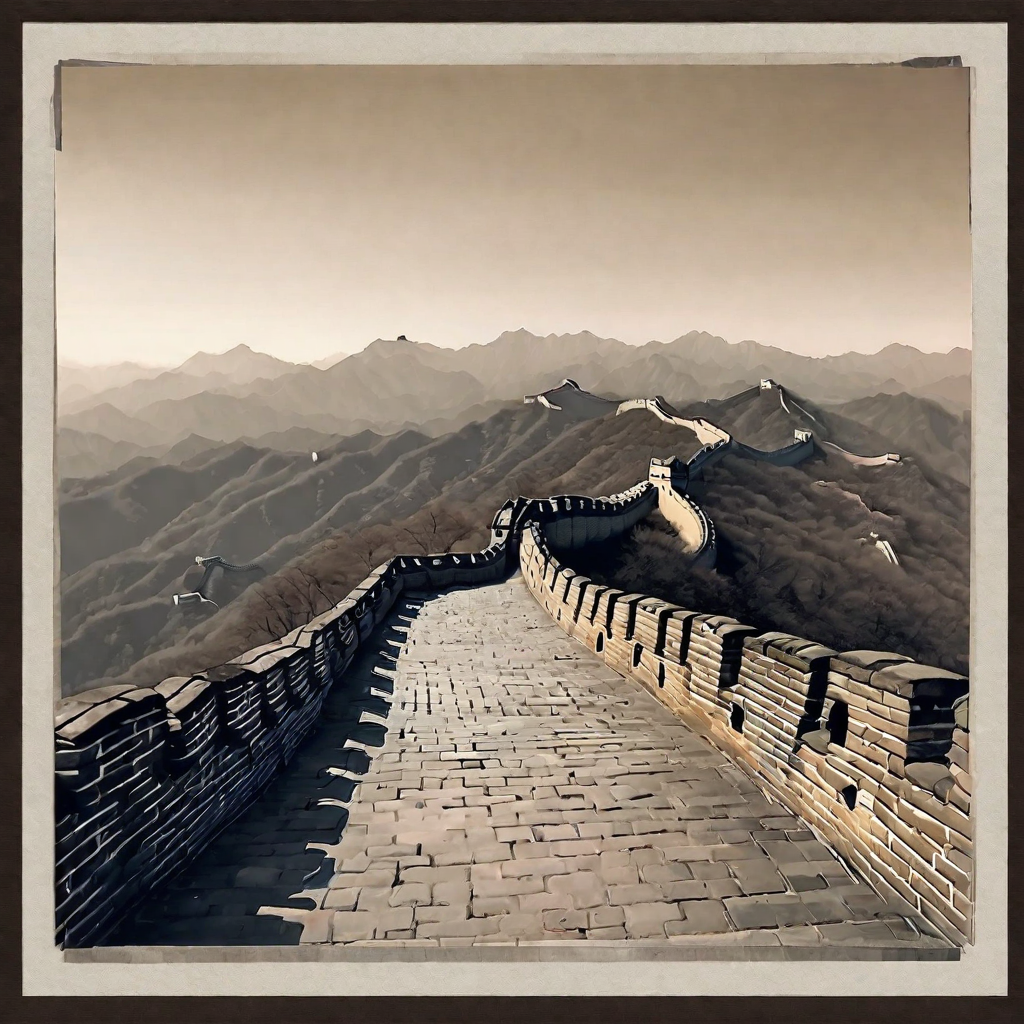

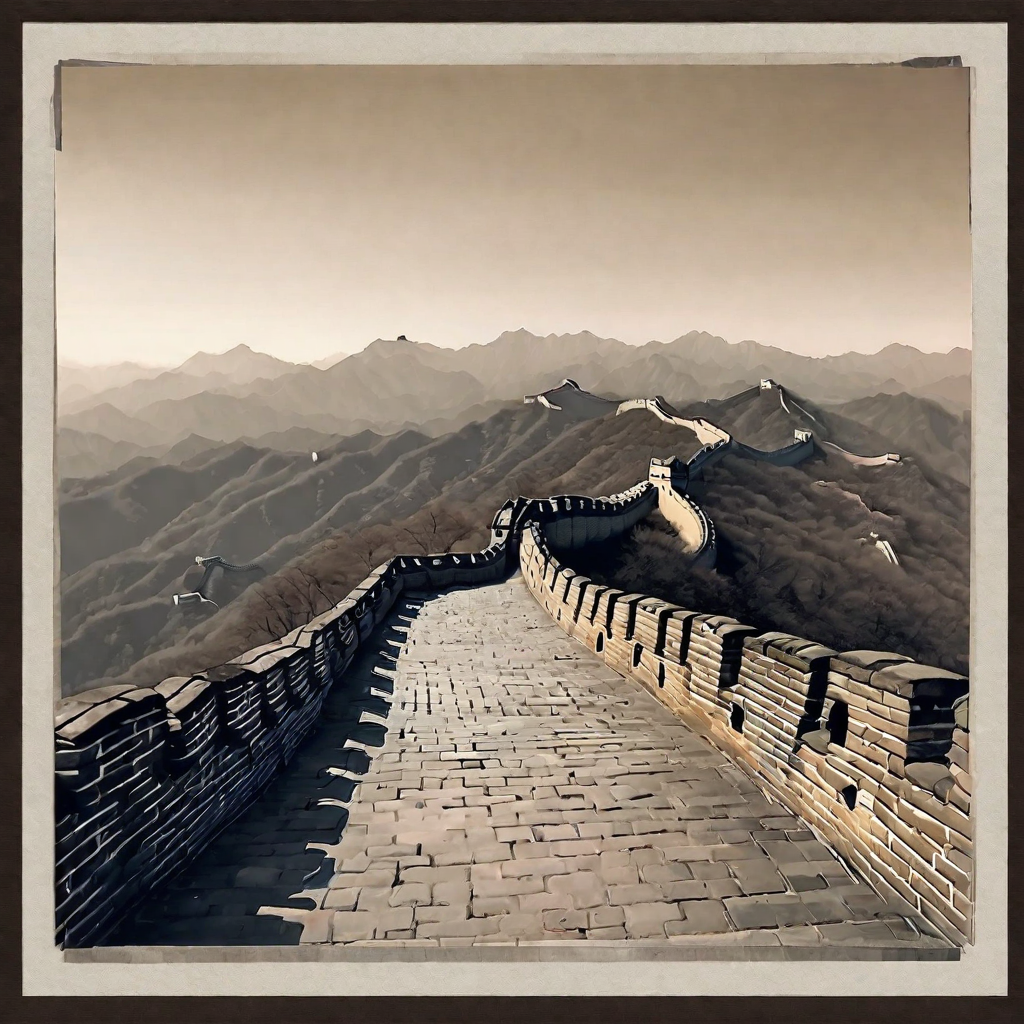

| Reference Image | Output Video |
| --- | --- |
| Scene Reference | Fireworks explode over the pyramids. |
| Scene Reference | The Great Wall burning with raging fire. |
| Object Reference | A cat is running on the beach. |

| Input Image | Output Video |
| --- | --- |
| | Underwater environment cosmetic bottles. |
| | A big drop of water falls on a rose petal. |
| | A fish swims past an oriental woman. |
| | Cinematic photograph. View of piloting aaero. |
| | Planet hits earth. |

| Output Video | |
| --- | --- |
| A deer looks at the sunset behind him. | A duck is teaching math to another duck. |
| Bezos explores tropical rainforest. | Light blue water lapping on the beach. |