---

tags:

- ctranslate2

---

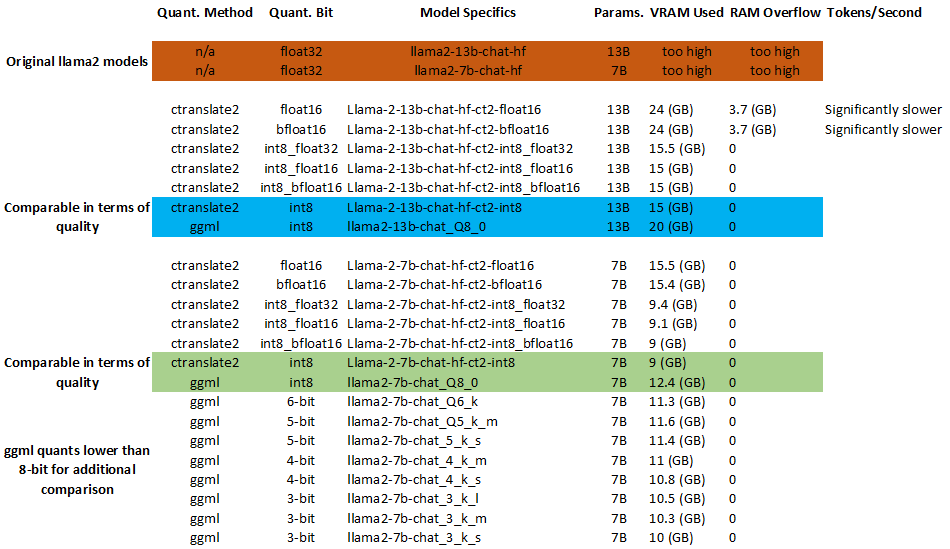

CTranslate2 is an amazing library for running these models. Compared with GGML and GPTQ models, they are faster, more accurate, and use less VRAM/RAM.

Instructions for running these models (coming soon): https://github.com/BBC-Esq

Learn more about the amazing CTranslate2 technology:

- https://github.com/OpenNMT/CTranslate2

- https://opennmt.net/CTranslate2/index.html

Compatibility and Data Formats

| Format | Approximate Size Compared to `float32` | Minimum Nvidia GPU Compute Capability | Accuracy Summary |
|-----------------|----------------------------|-----------------|--------------------------|
| `float32` | 100% | 1.0 | Full single precision; the highest precision and widest range in this table. Most un-quantized models use this. |
| `int16` | 51.37% | N/A (CPU only) | 16-bit integer quantization; more precise than `int8` because it has far more representable values. |
| `float16` | 50.00% | 5.3 (e.g. Nvidia 10 Series and Higher) | Half precision; balances precision and memory, but has a narrower range than `bfloat16`. |
| `bfloat16` | 50.00% | 8.0 (e.g. Nvidia 30 Series and Higher) | Often used in neural network training; same exponent range as `float32`, but less precision than `float16`. |
| `int8_float32` | 27.47% | test manually (see below) | `int8` for the quantized layers; the remaining layers run in `float32`. |
| `int8_float16` | 26.10% | test manually (see below) | `int8` for the quantized layers; the remaining layers run in `float16`. Saves memory. |
| `int8_bfloat16` | 26.10% | test manually (see below) | `int8` for the quantized layers; the remaining layers run in `bfloat16`. |
| `int8` | 25% | 1.0 | Lowest precision in this table; weights are stored as 8-bit integers with scaling factors. Often used where memory is crucial. |
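The size column roughly follows the number of bytes used per weight: 4 for `float32`, 2 for the 16-bit types, and 1 for `int8`. The sketch below shows only that arithmetic. The 7-billion-parameter count is a hypothetical example, and the calculation ignores extra data (likely quantization scales and layers that are not quantized), which is presumably why the measured sizes in the table sit slightly above 50% and 25%.

```python
# Bytes needed to store one weight in each format (weights only).
BYTES_PER_WEIGHT = {"float32": 4, "int16": 2, "float16": 2, "bfloat16": 2, "int8": 1}


def relative_to_float32(fmt: str) -> float:
    """Ideal size of a model stored in `fmt`, as a fraction of its float32 size."""
    return BYTES_PER_WEIGHT[fmt] / BYTES_PER_WEIGHT["float32"]


def approx_weights_gb(num_params: int, fmt: str) -> float:
    """Rough weight storage in GB (1 GB = 1e9 bytes), ignoring scales and metadata."""
    return num_params * BYTES_PER_WEIGHT[fmt] / 1e9


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter model
    for fmt in BYTES_PER_WEIGHT:
        share = relative_to_float32(fmt)
        size = approx_weights_gb(params, fmt)
        print(f"{fmt:>9}: {share:6.1%}  ~{size:.1f} GB")
```

For a 7B model this works out to about 28 GB of weights in `float32`, 14 GB in the 16-bit types, and 7 GB in `int8`.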

| Web Link | Description |
|-------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------|
| [CUDA GPUs Supported](https://en.wikipedia.org/wiki/CUDA#GPUs_supported) | Look up the "compute capability" of your Nvidia GPU. |
| [CTranslate2 Quantization](https://opennmt.net/CTranslate2/quantization.html#implicit-type-conversion-on-load) | Even if your GPU/CPU doesn't support the data type of the model you download, CTranslate2 automatically converts the model to a supported type when it is loaded. |
| [Bfloat16 Floating-Point Format](https://en.wikipedia.org/wiki/Bfloat16_floating-point_format#bfloat16_floating-point_format) | Diagrams comparing the bit layouts of the floating-point formats. |
| [Nvidia Floating-Point](https://docs.nvidia.com/cuda/floating-point/index.html) | Technical discussion of floating-point accuracy on Nvidia GPUs. |
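Because of that implicit conversion, a model can be loaded even on hardware that lacks its stored type, but you can also pick the compute type yourself to control memory use. The sketch below assumes a text-generation (decoder-only) model loaded with `ctranslate2.Generator`; the preference order and the helper names `pick_compute_type` and `load_generator` are hypothetical choices for illustration, not CTranslate2 defaults.

```python
# Hypothetical preference order: int8 variants first, then 16-bit types, then float32.
PREFERENCE = (
    "int8_float16", "int8_bfloat16", "int8_float32", "int8",
    "float16", "bfloat16", "int16", "float32",
)


def pick_compute_type(supported, preference=PREFERENCE) -> str:
    """Return the first type in `preference` that the device supports."""
    for compute_type in preference:
        if compute_type in supported:
            return compute_type
    raise ValueError(f"no preferred compute type in {sorted(supported)}")


def load_generator(model_dir: str):
    """Load a CTranslate2 text-generation model with an explicitly chosen compute type.

    `model_dir` is a placeholder for the folder of a downloaded CTranslate2 model.
    """
    import ctranslate2  # imported here so pick_compute_type works without it

    device = "cuda" if ctranslate2.get_cuda_device_count() > 0 else "cpu"
    compute_type = pick_compute_type(ctranslate2.get_supported_compute_types(device))
    return ctranslate2.Generator(model_dir, device=device, compute_type=compute_type)
```

With the CPU set from the example system below, `pick_compute_type` returns `"int8_float32"`; with the GPU set it returns `"int8_float16"`.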

Check Compatibility Manually

Open a command prompt and run the following commands. GPU support may also require the CUDA Toolkit and cuDNN to be installed (not yet verified):

```bash
pip install ctranslate2
```

```bash
python
```

```python
import ctranslate2
```

Check GPU/CUDA compatibility:

```python
ctranslate2.get_supported_compute_types("cuda")
```

Check CPU compatibility:

```python
ctranslate2.get_supported_compute_types("cpu")
```

Each call returns the set of compute types your GPU or CPU supports. For example, a system with an RTX 4090 GPU and a Core i9-13900K CPU has the following compatibility:

| | **CPU** | **GPU** |
|-----------------|---------|---------|
| **`float32`** | ✅ | ✅ |
| **`int16`** | ✅ | |
| **`float16`** | | ✅ |
| **`bfloat16`** | | ✅ |
| **`int8_float32`** | ✅ | ✅ |
| **`int8_float16`** | | ✅ |
| **`int8_bfloat16`** | | ✅ |
| **`int8`** | ✅ | ✅ |
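The manual checks above can be combined into one script that prints the same ✅ layout as this table. It is a sketch: `supported_compute_types` and `compatibility_table` are hypothetical helper names, and it assumes `ctranslate2.get_cuda_device_count()` reports 0 on machines without a usable CUDA device, so the GPU query can be skipped there.

```python
# Row order matches the tables in this model card.
FORMATS = ["float32", "int16", "float16", "bfloat16",
           "int8_float32", "int8_float16", "int8_bfloat16", "int8"]


def supported_compute_types() -> dict:
    """Return {"cpu": set, "cuda": set} of compute types CTranslate2 supports.

    Returns an empty dict if ctranslate2 is not installed. The "cuda" key is
    only present when CTranslate2 can see at least one CUDA device.
    """
    try:
        import ctranslate2
    except ImportError:
        return {}

    result = {"cpu": set(ctranslate2.get_supported_compute_types("cpu"))}
    if ctranslate2.get_cuda_device_count() > 0:
        result["cuda"] = set(ctranslate2.get_supported_compute_types("cuda"))
    return result


def compatibility_table(cpu_types, gpu_types, formats=FORMATS) -> str:
    """Render a Markdown table marking which formats each device supports."""
    lines = ["| | **CPU** | **GPU** |", "|---|---|---|"]
    for fmt in formats:
        cpu = "✅" if fmt in cpu_types else ""
        gpu = "✅" if fmt in gpu_types else ""
        lines.append(f"| **`{fmt}`** | {cpu} | {gpu} |")
    return "\n".join(lines)


if __name__ == "__main__":
    types = supported_compute_types()
    if not types:
        print("ctranslate2 is not installed (pip install ctranslate2)")
    else:
        print(compatibility_table(types["cpu"], types.get("cuda", set())))
```

Feeding `compatibility_table` the sets from the RTX 4090 / i9-13900K example reproduces the table above.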