Upload folder using huggingface_hub

#2 opened by begumcig

- base_results.json +19 -0

- plots.png +0 -0

- smashed_results.json +19 -0

base_results.json

ADDED

@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 3.4586403369903564,
+    "memory_inference_first": 808.0,
+    "memory_inference": 808.0,
+    "token_generation_latency_sync": 37.12965393066406,
+    "token_generation_latency_async": 37.13082652539015,
+    "token_generation_throughput_sync": 0.0269326507019807,
+    "token_generation_throughput_async": 0.02693180016653272,
+    "token_generation_CO2_emissions": 1.8527108646322842e-05,
+    "token_generation_energy_consumption": 0.001813133805152576,
+    "inference_latency_sync": 118.66042785644531,
+    "inference_latency_async": 46.790528297424316,
+    "inference_throughput_sync": 0.008427409356805911,
+    "inference_throughput_async": 0.021371846747347947,
+    "inference_CO2_emissions": 1.8868051579151425e-05,
+    "inference_energy_consumption": 6.49589917025334e-05
+}

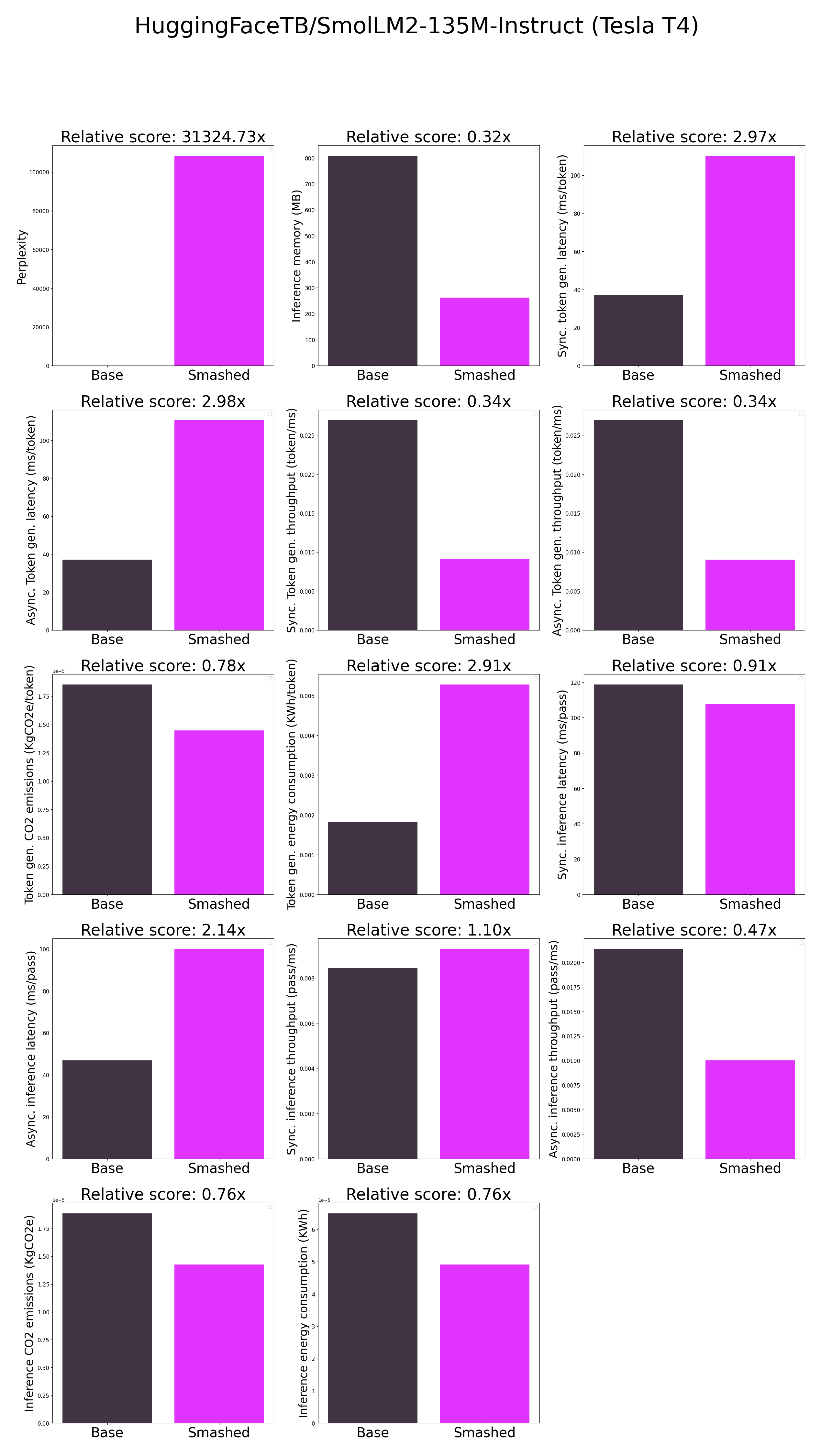

plots.png

ADDED


smashed_results.json

ADDED

@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 108340.9609375,
+    "memory_inference_first": 262.0,
+    "memory_inference": 262.0,
+    "token_generation_latency_sync": 110.18281021118165,
+    "token_generation_latency_async": 110.60973107814789,
+    "token_generation_throughput_sync": 0.009075825875954263,
+    "token_generation_throughput_async": 0.009040795870785373,
+    "token_generation_CO2_emissions": 1.4485038513711413e-05,
+    "token_generation_energy_consumption": 0.005279731847863916,
+    "inference_latency_sync": 107.71225280761719,
+    "inference_latency_async": 99.97386932373047,
+    "inference_throughput_sync": 0.009283994846770878,
+    "inference_throughput_async": 0.010002613750617666,
+    "inference_CO2_emissions": 1.4262623257981447e-05,
+    "inference_energy_consumption": 4.912709554389577e-05
+}
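The two result files share the same keys, so they can be compared metric by metric. The sketch below is illustrative and not part of this pull request: it copies a few values from base_results.json and smashed_results.json and computes the smashed-to-base ratio for each numeric metric. The helper name `metric_ratios` is hypothetical, and the files do not state units, so the ratios are unitless and the code makes no claim about what each metric measures.

```python
from numbers import Real

# A subset of values copied verbatim from the two files in this diff.
base = {
    "current_gpu_type": "Tesla T4",
    "current_gpu_total_memory": 15095.0625,
    "perplexity": 3.4586403369903564,
    "memory_inference": 808.0,
    "token_generation_latency_sync": 37.12965393066406,
    "inference_latency_sync": 118.66042785644531,
}
smashed = {
    "current_gpu_type": "Tesla T4",
    "current_gpu_total_memory": 15095.0625,
    "perplexity": 108340.9609375,
    "memory_inference": 262.0,
    "token_generation_latency_sync": 110.18281021118165,
    "inference_latency_sync": 107.71225280761719,
}


def metric_ratios(base_results, smashed_results):
    """Return smashed/base for every key that is numeric in both dicts.

    Hypothetical helper for illustration only. Non-numeric entries
    (such as the GPU name) and zero base values are skipped.
    """
    ratios = {}
    for key, base_value in base_results.items():
        smashed_value = smashed_results.get(key)
        if isinstance(base_value, bool) or isinstance(smashed_value, bool):
            continue
        if (
            isinstance(base_value, Real)
            and isinstance(smashed_value, Real)
            and base_value != 0
        ):
            ratios[key] = smashed_value / base_value
    return ratios


ratios = metric_ratios(base, smashed)
for name, ratio in sorted(ratios.items()):
    print(f"{name}: {ratio:.4g}x")
```

A ratio below 1 means the smashed run reported a smaller value than the base run for that key, and a ratio above 1 means a larger one. To compare the complete files, load each with `json.load` and pass the resulting dicts to the same helper.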