---

language:

- en

thumbnail: ""

tags:

- painting

- anime

- stable-diffusion

- aiart

- text-to-image

license: creativeml-openrail-m

---

# DGSpitzer Art Diffusion

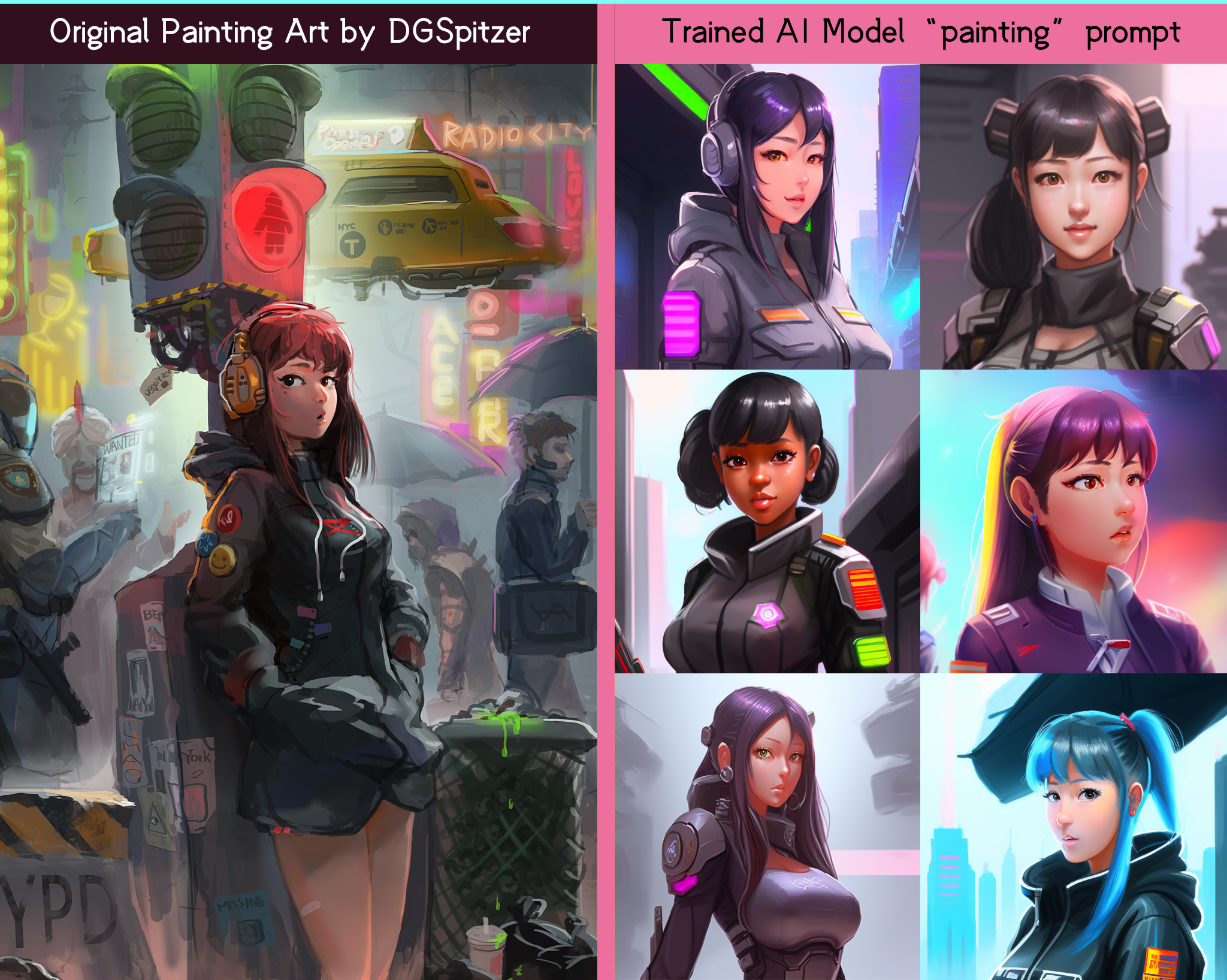

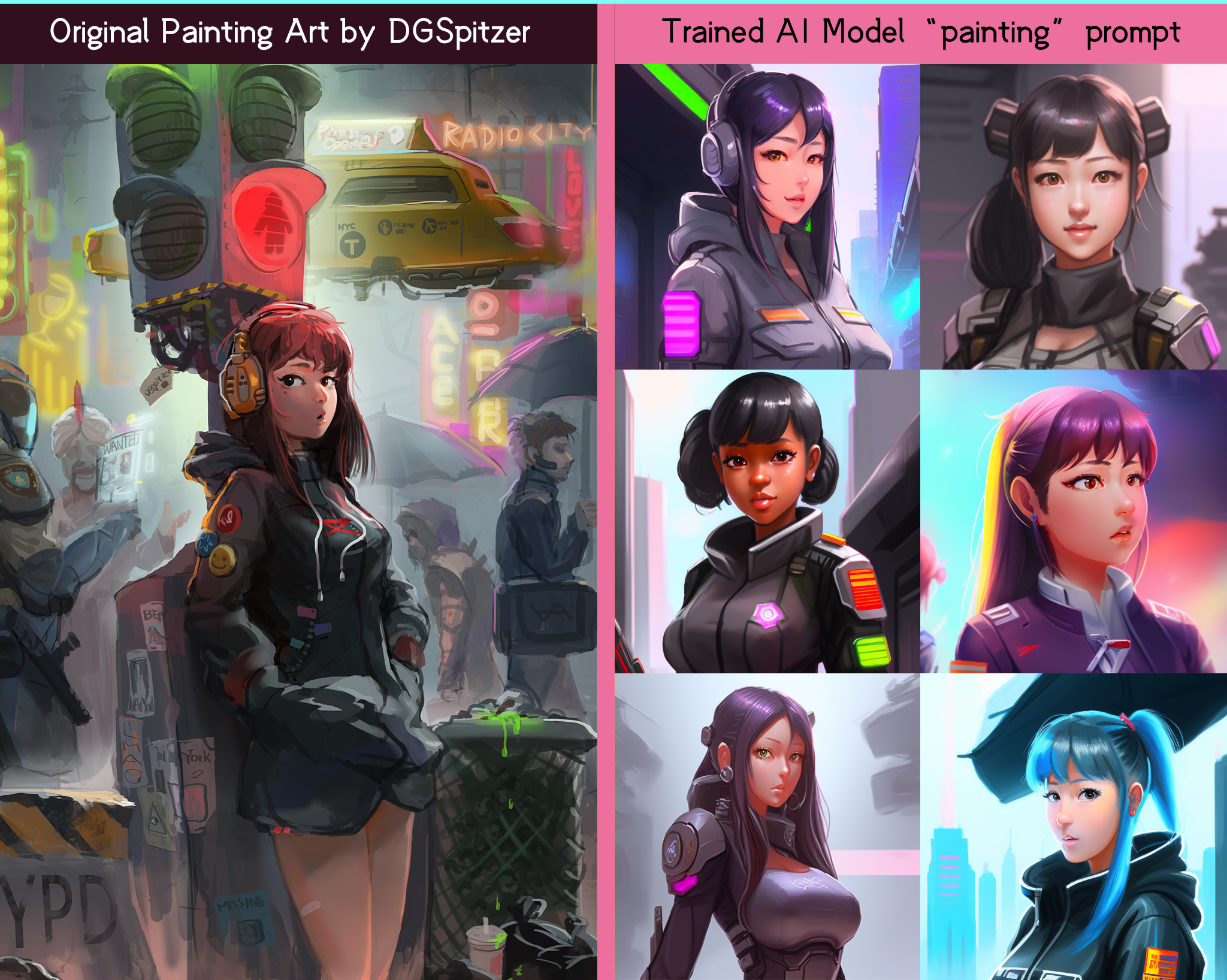

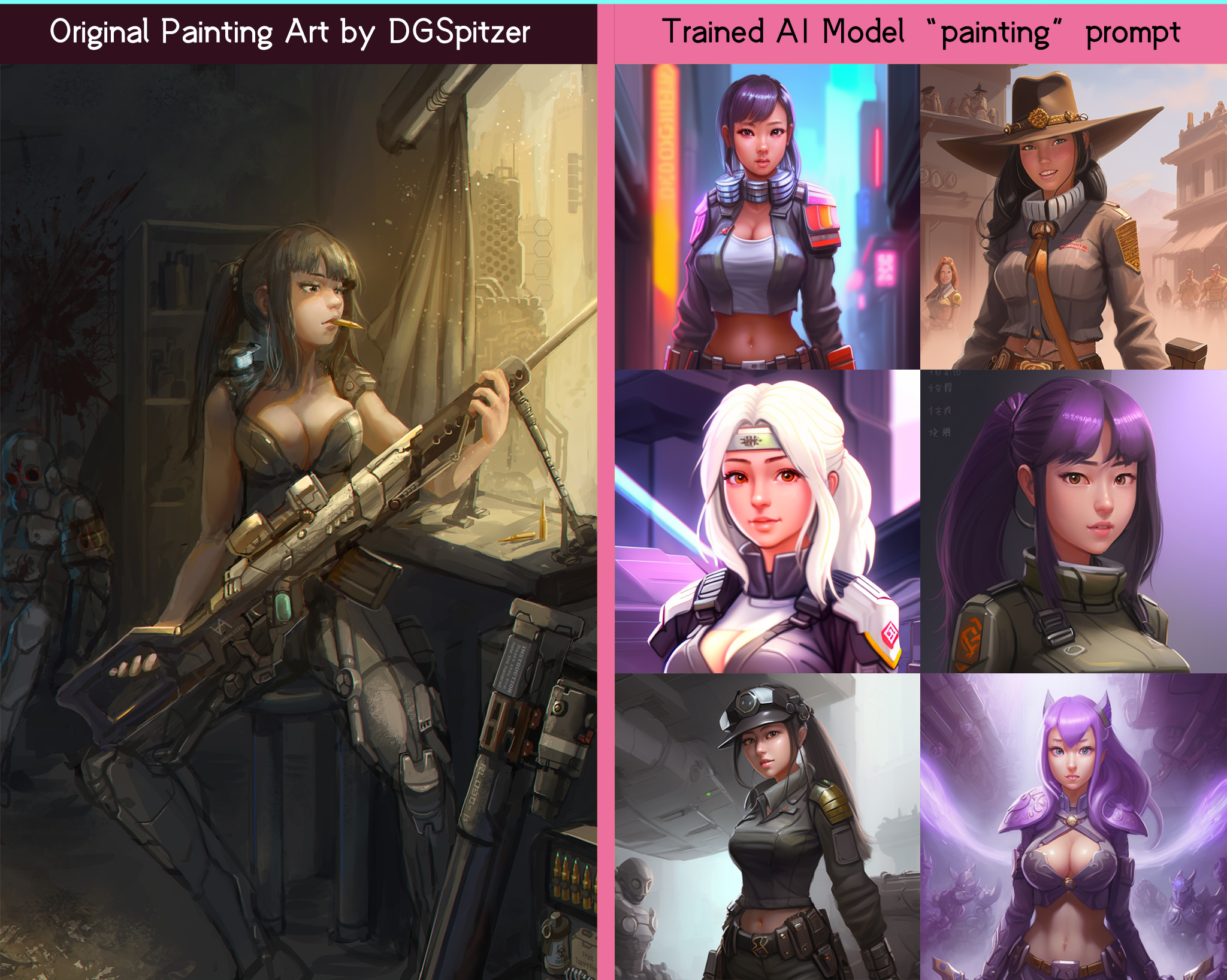

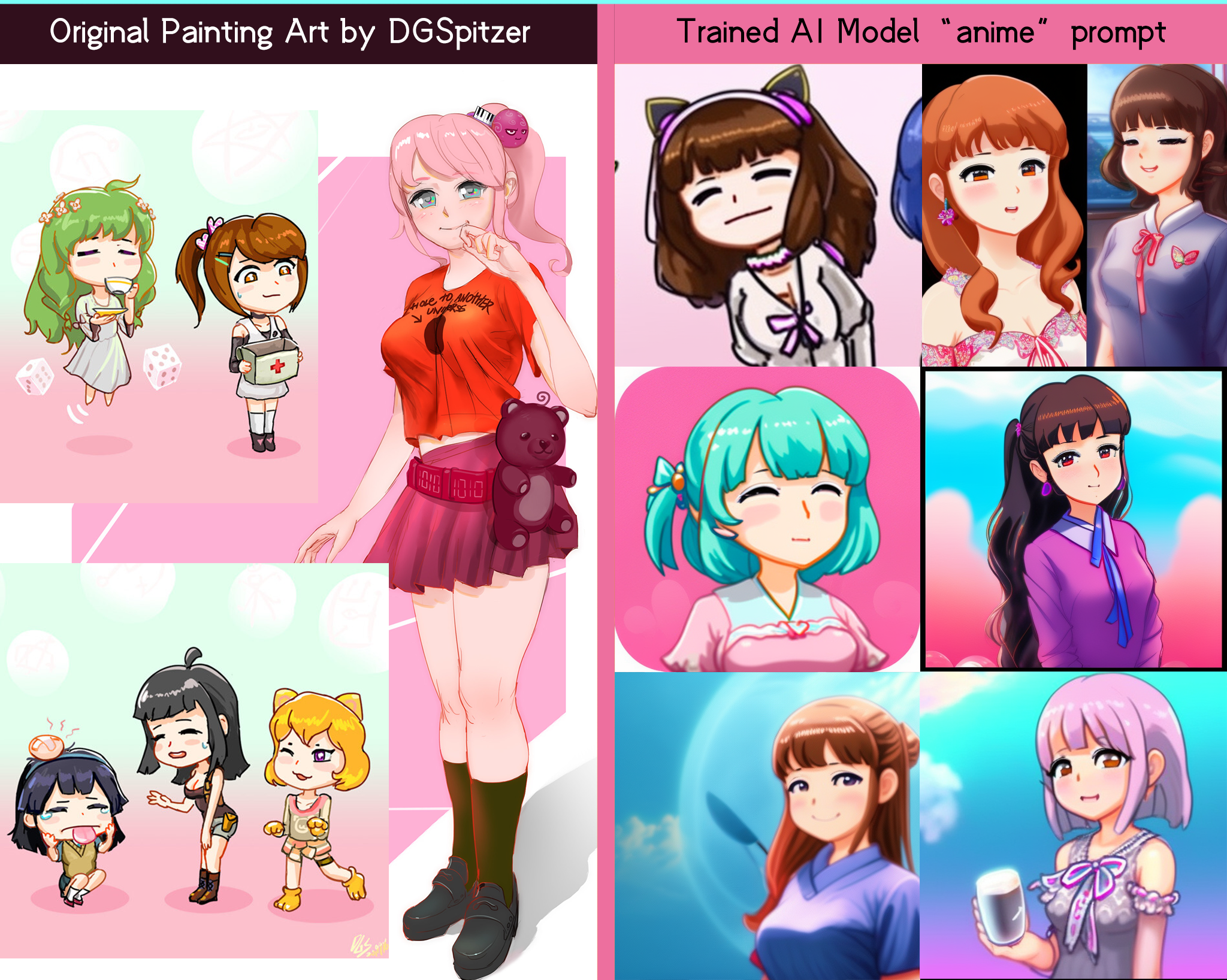

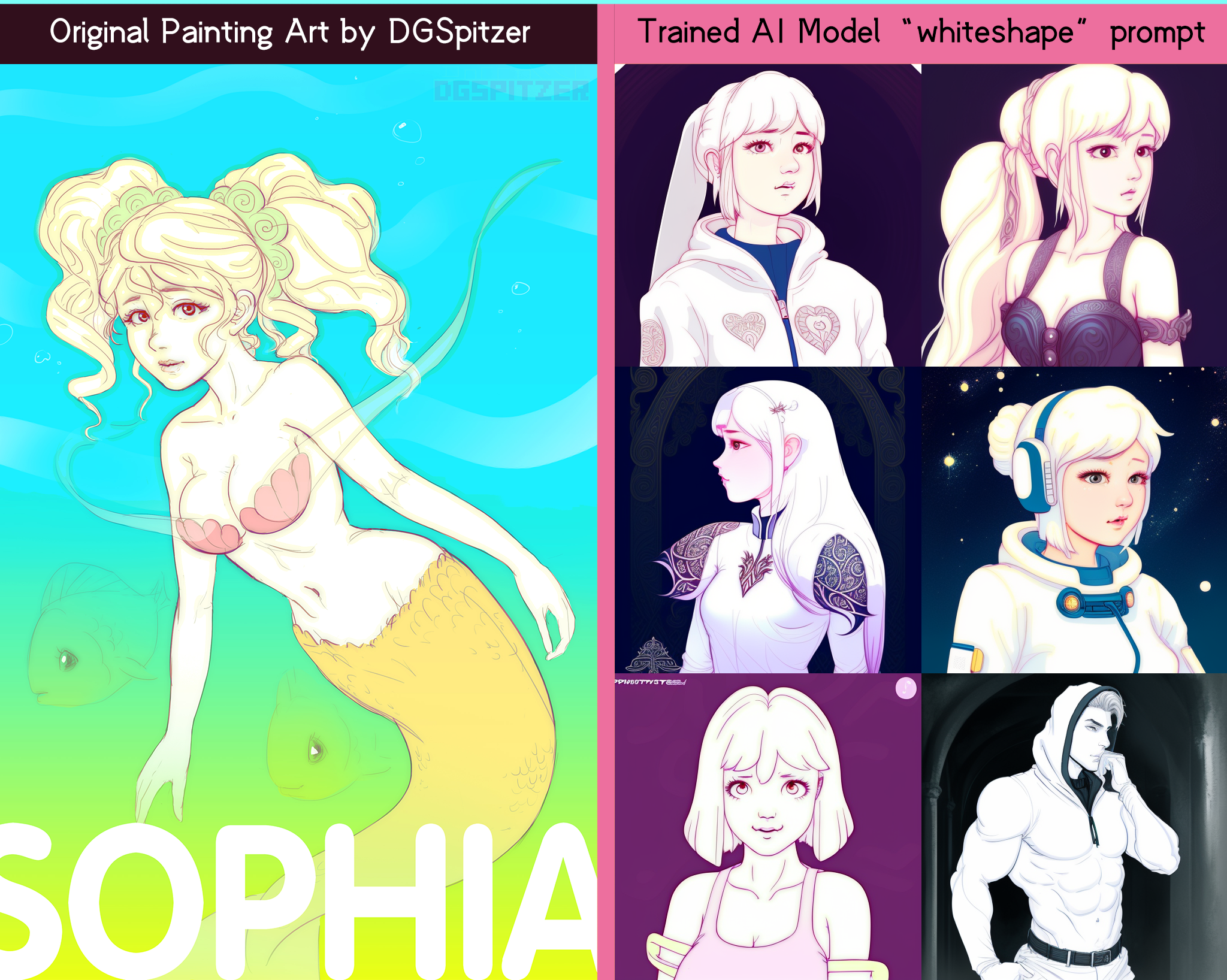

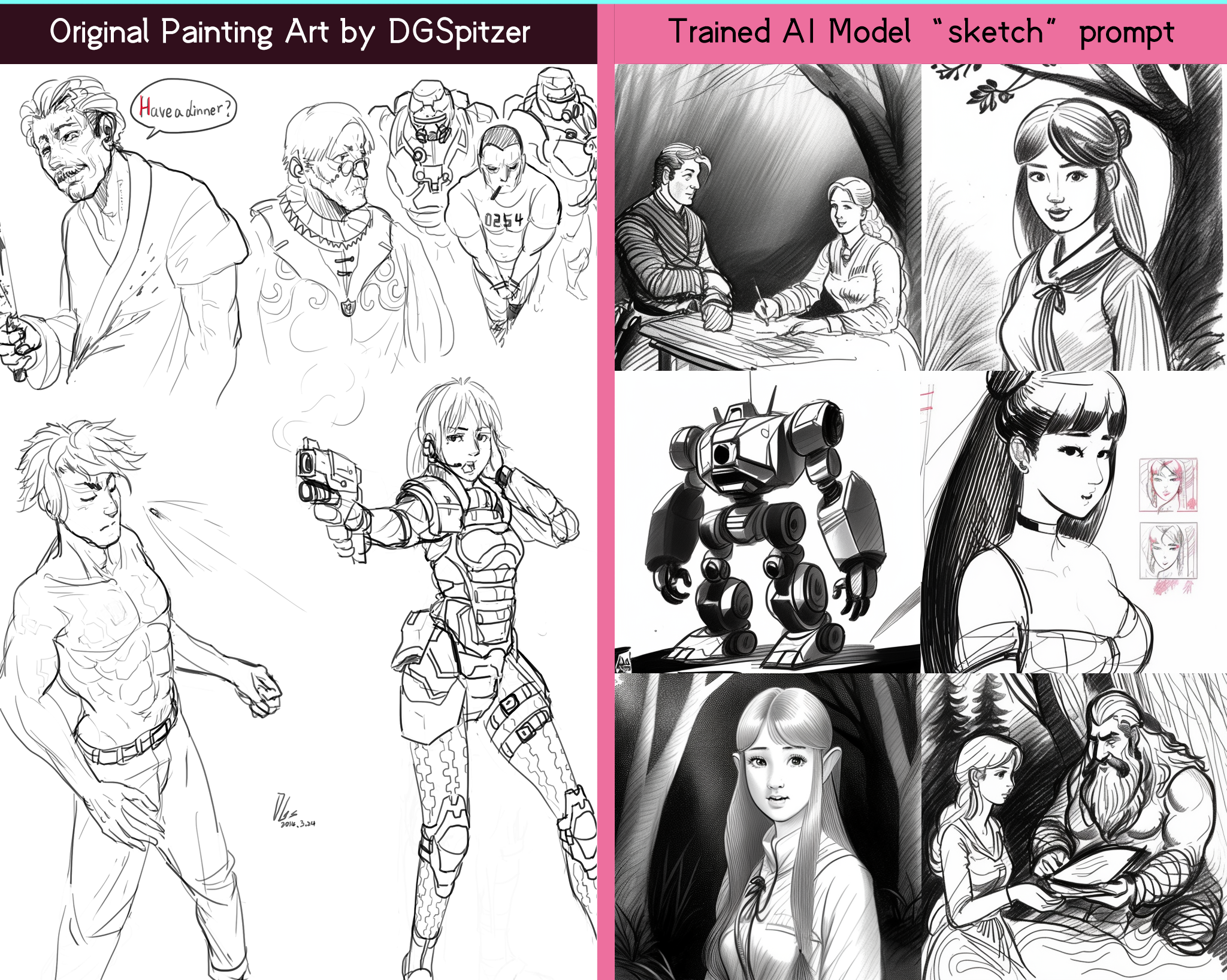

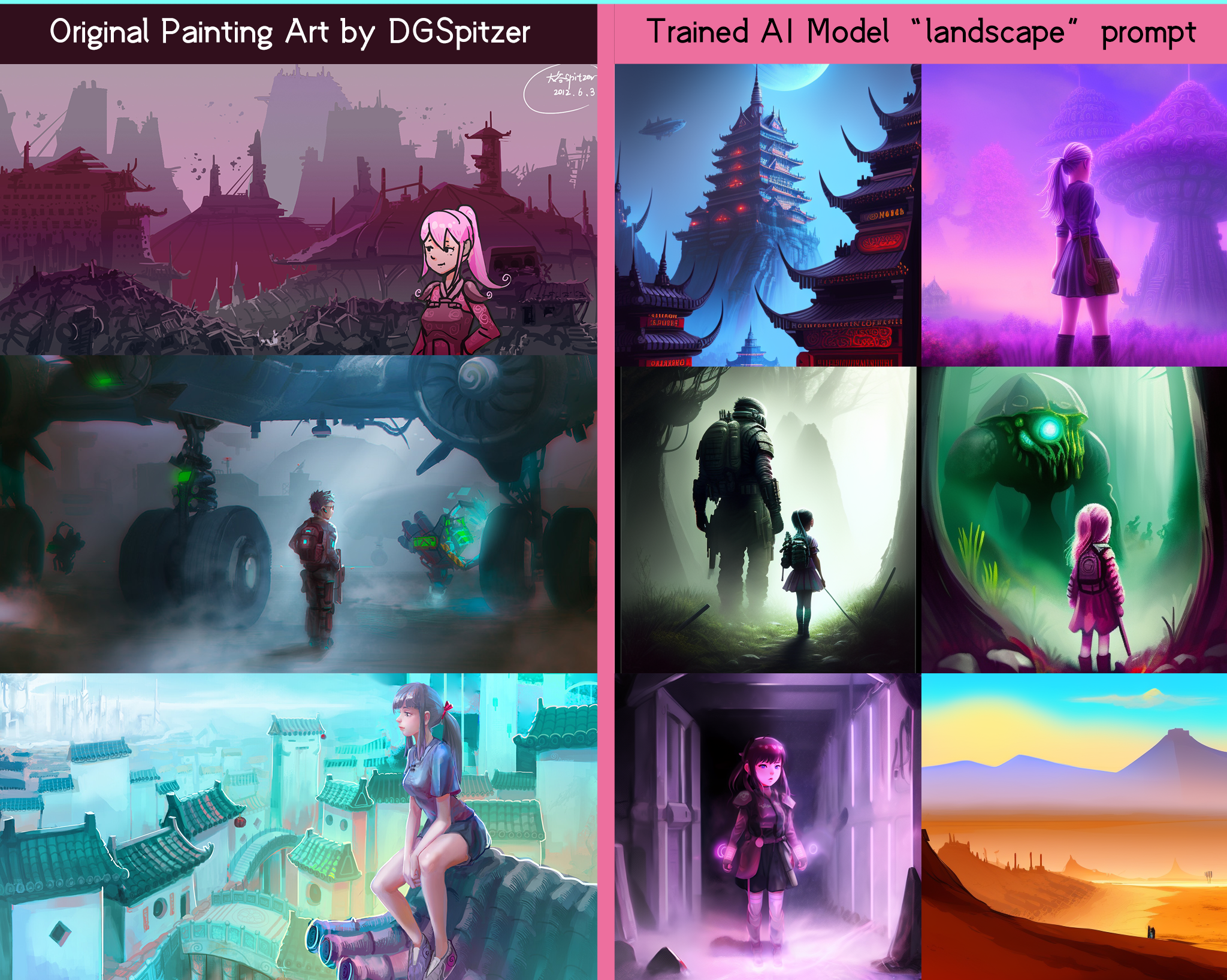

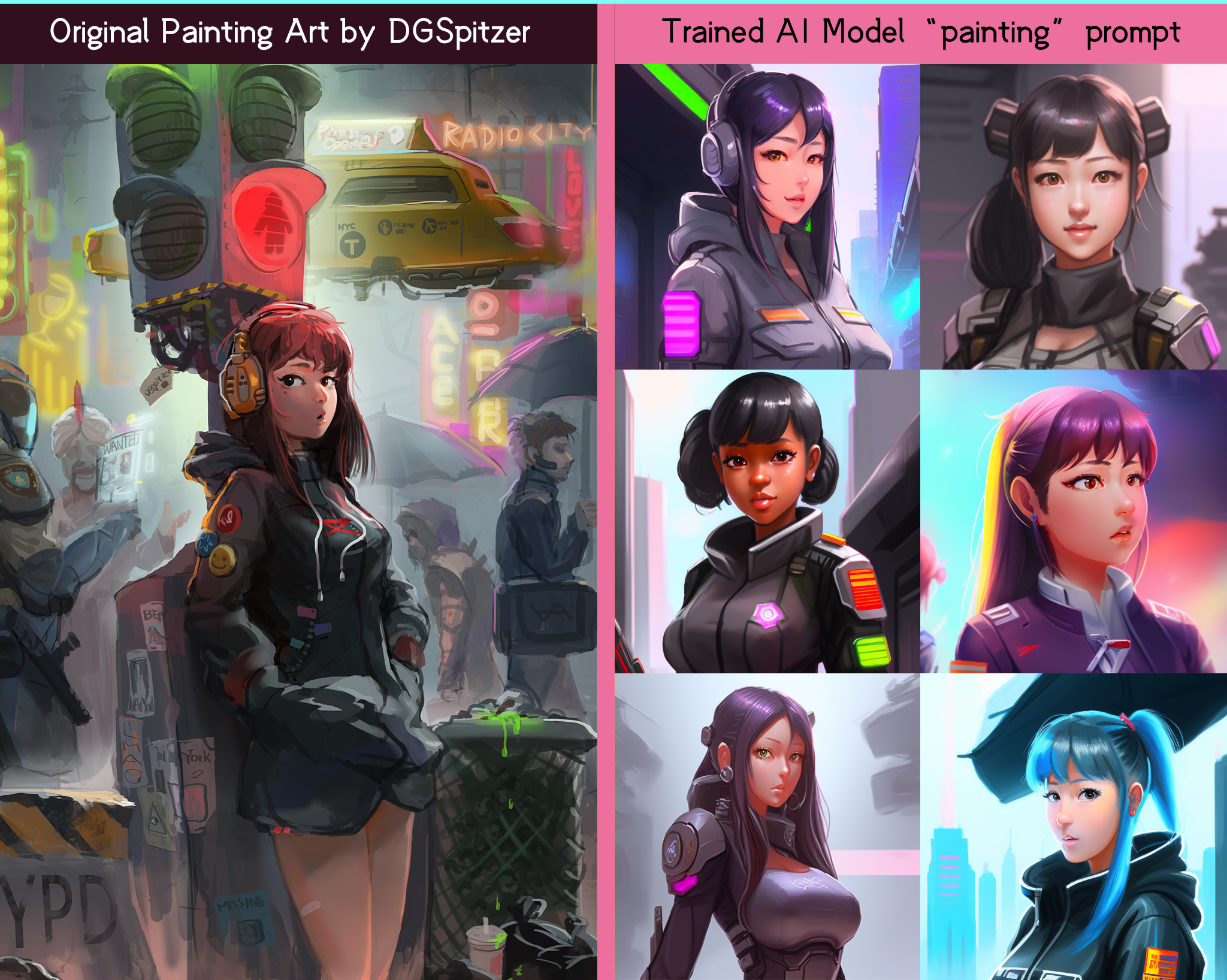

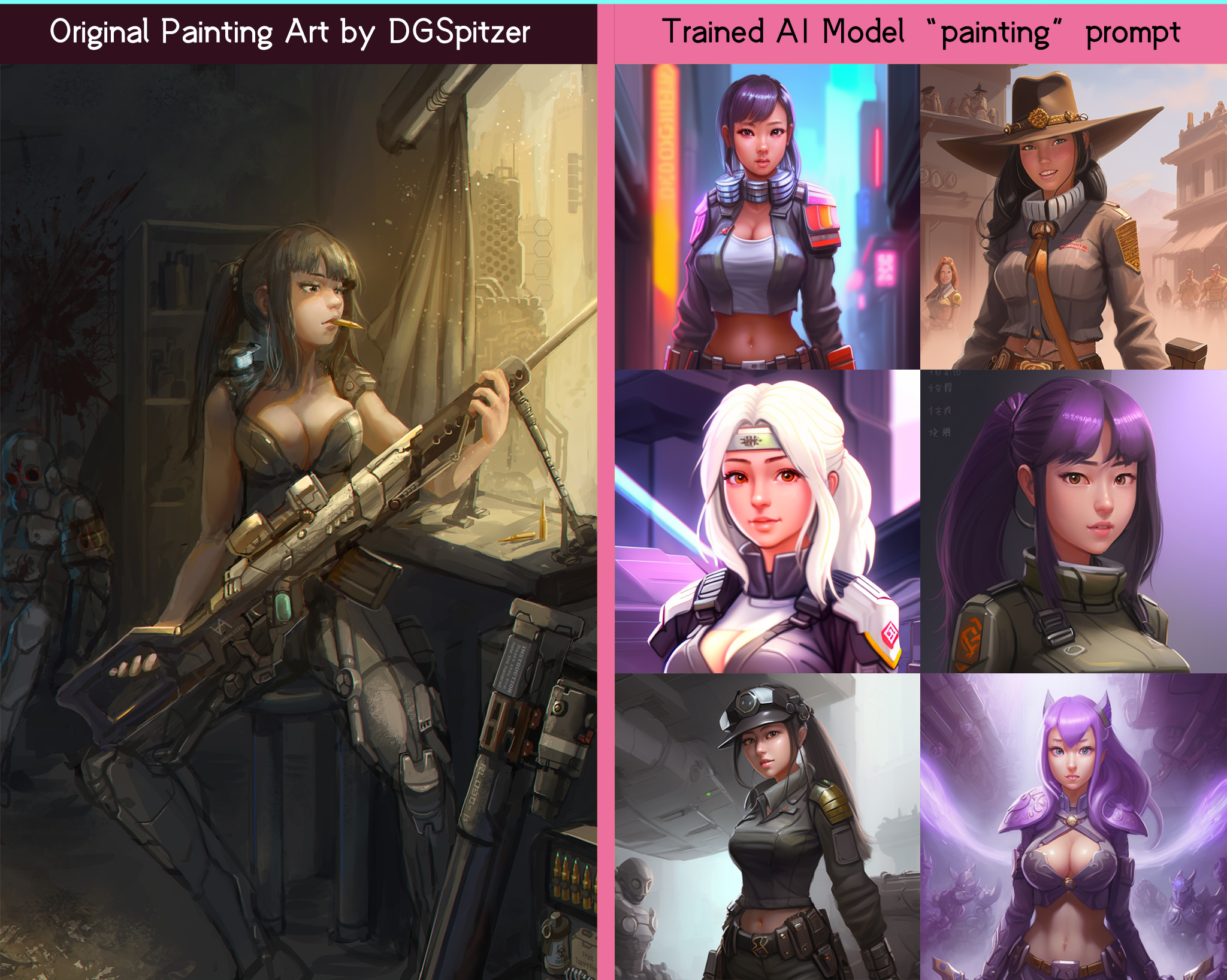

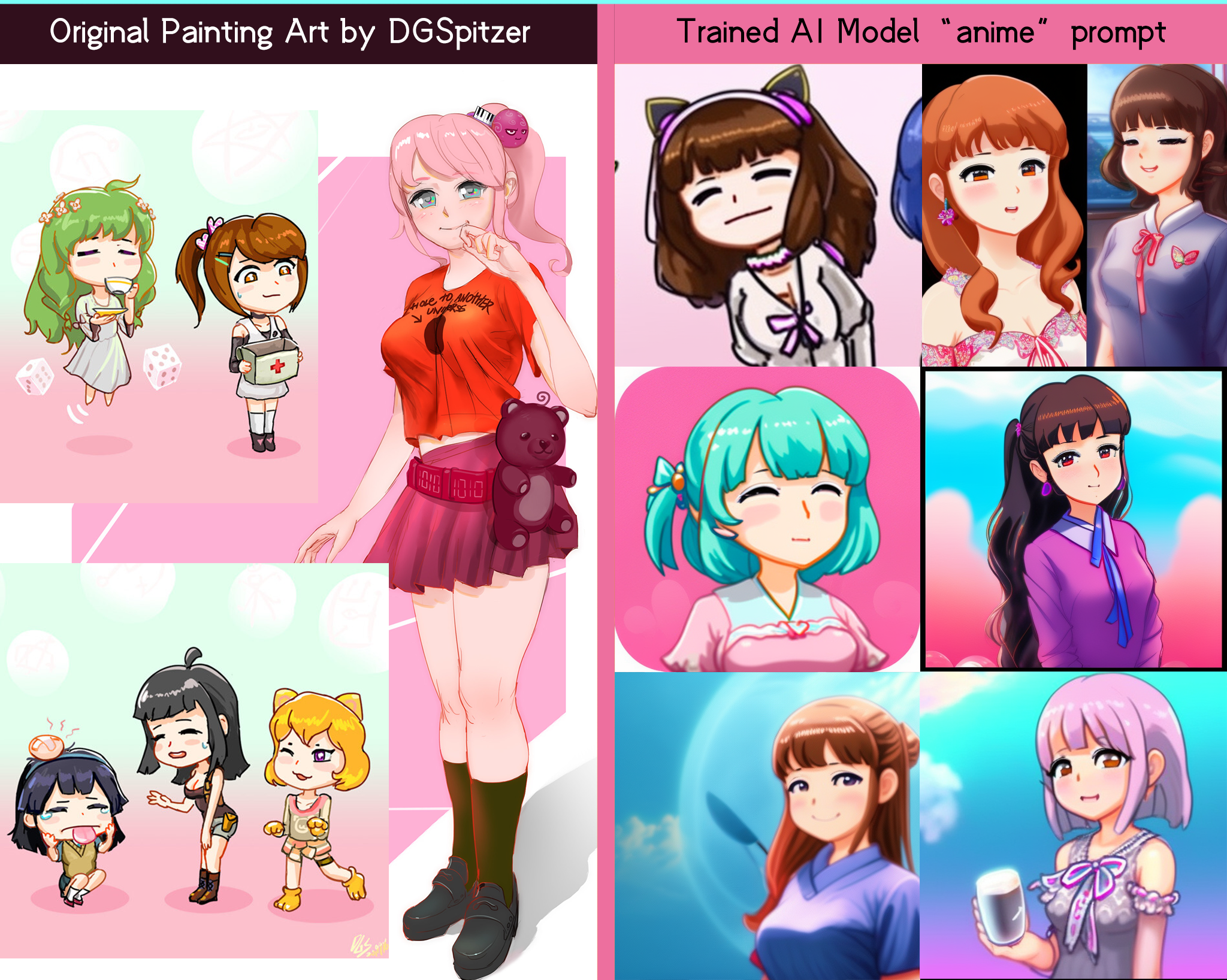

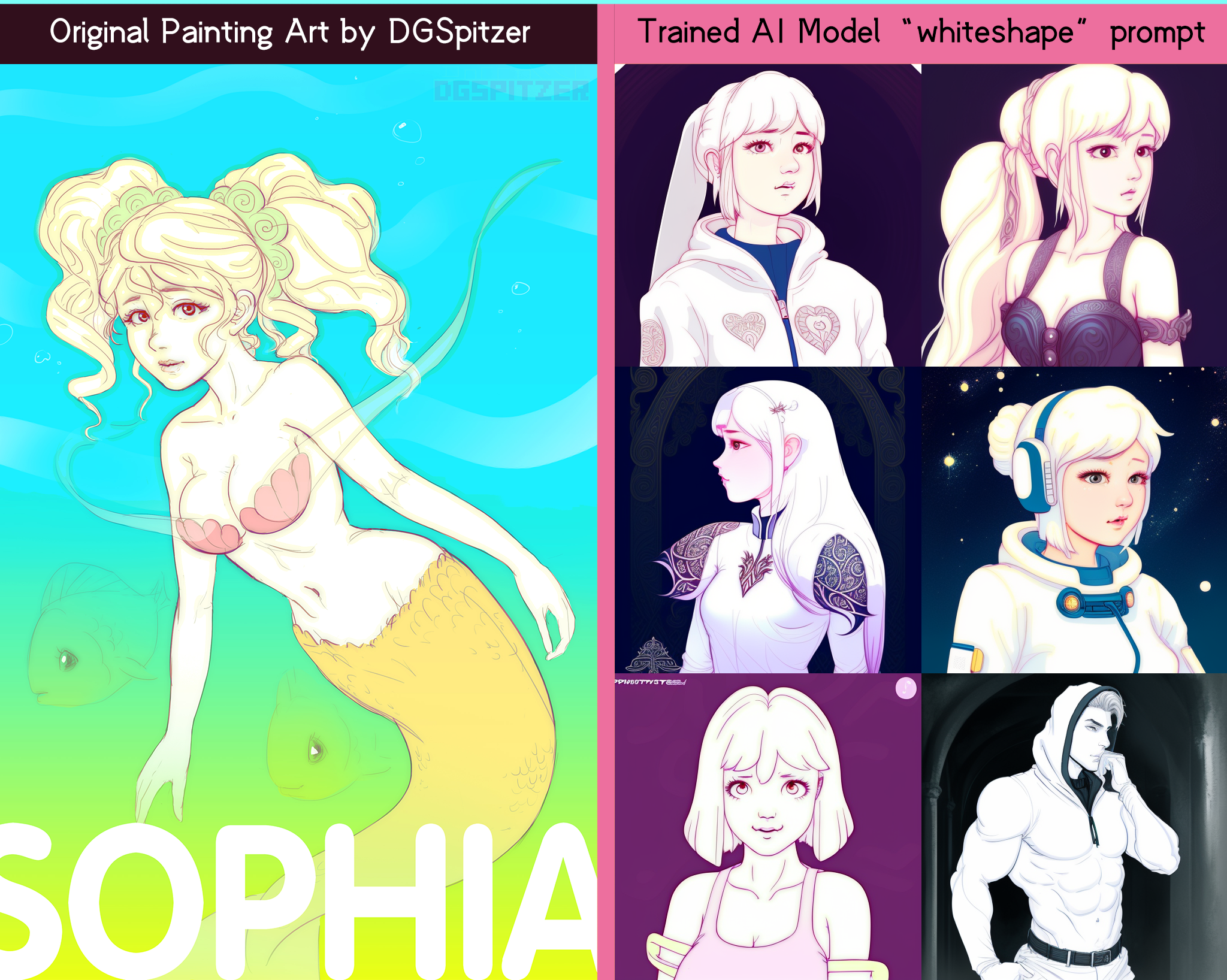

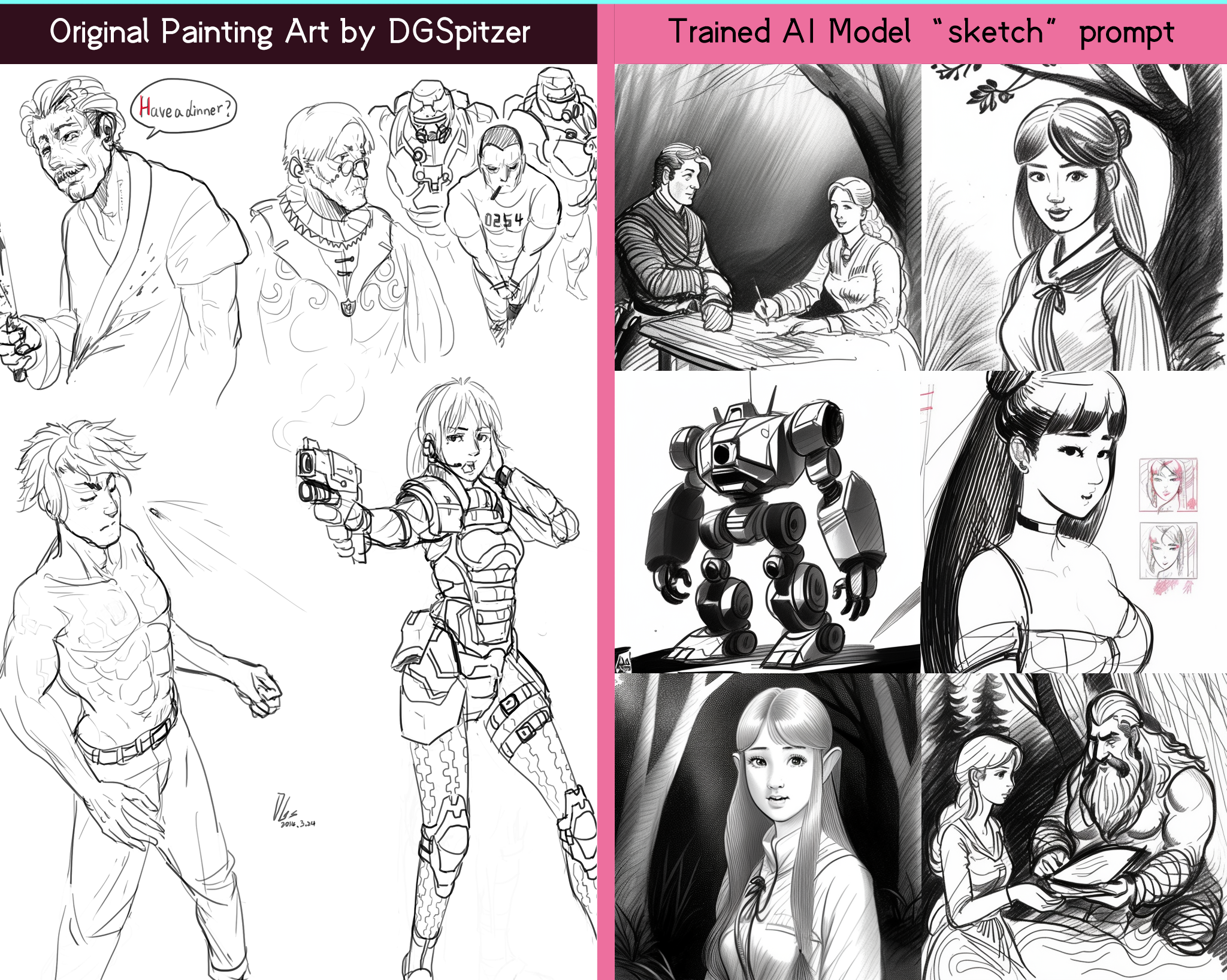

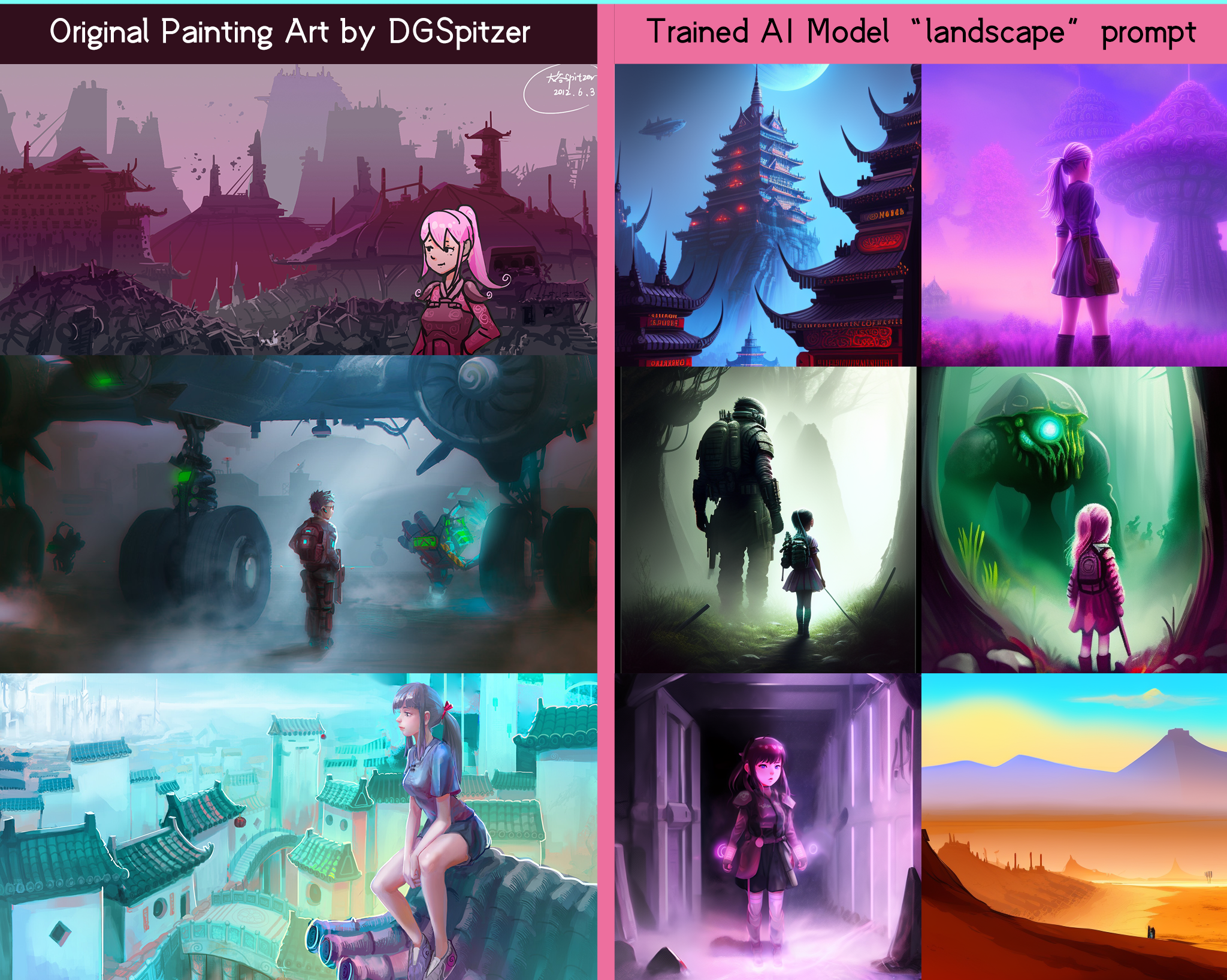

DGSpitzer Art Diffusion is **a unique AI model that is trained to draw in my art style!**

Hello folks! This is DGSpitzer. I'm a game developer, music composer and digital artist based in New York. You can check out my artwork, games and more here:

[Games](https://helixngc7293.itch.io/)

[Arts](https://www.artstation.com/helixngc7293)

[Music](https://soundcloud.com/dgspitzer)

I've been working on AI-related projects on my [YouTube channel](https://www.youtube.com/channel/UCzzsYBF4qwtMwJaPJZ5SuPg) since 2020, especially colorizing old black & white footage using a series of AI technologies.

I have always seen artificial intelligence as an assistive tool for extending my creativity. No matter how powerful AI becomes, we humans will keep the leading role in the creative process, and AI will act as a helper that provides suggestions and generates drafts.

In my opinion, there is huge potential in bringing AI into the modern digital artist's work pipeline, so I trained this AI model on my art style as an experiment. And I'm **sharing it with you for free!**

All of the training images for this AI model come from my own digital paintings & game concept art; no other artists’ names are involved. I separated my artworks into detailed categories such as “outline”, “sketch”, “anime” and “landscape”. As a result, this AI model supports multiple keywords in the prompt, each matching a different style of mine!

The model is fine-tuned from the [Vintedois diffusion-v0-1 Model](https://huggingface.co/22h/vintedois-diffusion-v0-1/), but I completely overwrote the style of the vintedois diffusion model by using “arts” and “paintings” as keywords with my dataset during Dreambooth training.

**You are free to use/fine-tune this model, even commercially, as long as you follow the [CreativeML Open RAIL-M license](LICENSE).** Additionally, **you can use all my artworks as a dataset in your training**. Giving credit would be nice ;P

You're welcome to share your results using this model! Looking forward to creating something truly amazing!

### 🧨 Diffusers

This repo contains both .ckpt and Diffusers model files. It can be used like any other Stable Diffusion model with the standard [Stable Diffusion Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can convert this model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX](https://huggingface.co/blog/stable_diffusion_jax).

```python
# Example for loading the model with Diffusers
#!pip install diffusers transformers scipy torch

import torch
from diffusers import StableDiffusionPipeline

model_id = "DGSpitzer/DGSpitzer-Art-Diffusion"

# Load the weights in half precision and move the pipeline to the GPU
pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
pipe = pipe.to("cuda")

prompt = "dgspitzer painting of a beautiful mech girl, 4k, photorealistic"

# Generate one image and save it to disk
image = pipe(prompt).images[0]
image.save("./dgspitzer_ai_art.png")
```
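
The loading example above assumes a CUDA GPU. As a rough sketch, assuming a recent PyTorch build, the device could be picked at runtime instead. `pick_device_and_dtype` and `load_pipeline` are hypothetical helper names, and falling back to float32 outside CUDA is a conservative assumption, not something tested with this model.

```python
from typing import Tuple

MODEL_ID = "DGSpitzer/DGSpitzer-Art-Diffusion"


def pick_device_and_dtype(cuda_available: bool, mps_available: bool) -> Tuple[str, str]:
    """Return (device, dtype name): half precision on CUDA, float32 elsewhere."""
    if cuda_available:
        return "cuda", "float16"
    if mps_available:
        return "mps", "float32"
    return "cpu", "float32"


def load_pipeline(model_id: str = MODEL_ID):
    """Load the pipeline on the best available device (downloads the weights)."""
    # Imported lazily so the helper above works without torch installed.
    import torch
    from diffusers import StableDiffusionPipeline

    device, dtype_name = pick_device_and_dtype(
        torch.cuda.is_available(),
        torch.backends.mps.is_available(),
    )
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id, torch_dtype=getattr(torch, dtype_name)
    )
    return pipe.to(device)
```

With this sketch, `pipe = load_pipeline()` would stand in for the `from_pretrained(...)` and `.to("cuda")` lines, and the rest of the example stays the same.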

# Online Demo

You can try the online Web UI demo built with [Gradio](https://github.com/gradio-app/gradio), or use the Colab notebook, here:

*My Online Space Demo*

[DGS-Diffusion-Space](https://huggingface.co/spaces/DGSpitzer/DGS-Diffusion-Space)

*Buy me a coffee if you like this project ;P ♥*

[buymeacoffee.com/dgspitzer](https://www.buymeacoffee.com/dgspitzer)

# **👇Model👇**

AI model weights are available on Hugging Face: https://huggingface.co/DGSpitzer/DGSpitzer-Art-Diffusion

# Usage

After the model is loaded, use the keyword **dgs** in your prompt, together with **illustration style** for even better results.

For the sampler, use **Euler A** for the best results (**DDIM** kinda works too). CFG Scale 7 and 20 steps should be fine.
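
With Diffusers, these settings could look roughly like the sketch below. It assumes that the WebUI's "Euler A" sampler corresponds to `EulerAncestralDiscreteScheduler`, that CFG Scale maps to `guidance_scale`, and that a CUDA GPU is available. `SAMPLER_SETTINGS` and `generate` are hypothetical names.

```python
MODEL_ID = "DGSpitzer/DGSpitzer-Art-Diffusion"

# Recommended settings: 20 steps, CFG Scale 7
SAMPLER_SETTINGS = {"num_inference_steps": 20, "guidance_scale": 7.0}


def generate(prompt: str, out_path: str = "./dgs_euler_a.png") -> None:
    """Generate one image with the Euler Ancestral scheduler and save it."""
    # Lazy imports: this function needs diffusers, torch and a CUDA GPU.
    import torch
    from diffusers import EulerAncestralDiscreteScheduler, StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    # Swap the default scheduler, reusing the existing scheduler config
    pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)
    pipe = pipe.to("cuda")

    image = pipe(prompt, **SAMPLER_SETTINGS).images[0]
    image.save(out_path)
```

For instance, `generate("an anime girl in dgs illustration style")` would write one image to `./dgs_euler_a.png`.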

**Example 1:**

```

portrait of a girl in dgs illustration style, Anime girl, female soldier working in a cyberpunk city, cleavage, ((perfect femine face)), intricate, 8k, highly detailed, shy, digital painting, intense, sharp focus

```

For a male cyber robot character, you can add **muscular male** to improve the output.

**Example 2:**

```

a photo of muscular beard soldier male in dgs illustration style, half-body, holding robot arms, strong chest

```

**Example 3 (with Stable Diffusion WebUI):**

If you are using [AUTOMATIC1111's Stable Diffusion WebUI](https://github.com/AUTOMATIC1111/stable-diffusion-webui), you can simply use this as the **prompt** with the **Euler A** sampler, CFG Scale 7, 20 steps and 704 x 704 px output resolution:

```

an anime girl in dgs illustration style

```

And set the **negative prompt** to this to get a cleaner face:

```

out of focus, scary, creepy, evil, disfigured, missing limbs, ugly, gross, missing fingers

```
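
For Diffusers users, the same prompt, negative prompt and resolution could be passed as in the sketch below. `EXAMPLE_3`, `check_size` and `render` are hypothetical names, a CUDA GPU is assumed, and the scheduler is left at the pipeline default here. The size check reflects that Stable Diffusion pipelines expect the height and width to be divisible by 8.

```python
MODEL_ID = "DGSpitzer/DGSpitzer-Art-Diffusion"

# Example 3 expressed as keyword arguments for a Stable Diffusion pipeline call
EXAMPLE_3 = {
    "prompt": "an anime girl in dgs illustration style",
    "negative_prompt": (
        "out of focus, scary, creepy, evil, disfigured, "
        "missing limbs, ugly, gross, missing fingers"
    ),
    "height": 704,
    "width": 704,
    "num_inference_steps": 20,
    "guidance_scale": 7.0,
}


def check_size(height: int, width: int) -> None:
    """Raise ValueError unless both dimensions are multiples of 8."""
    if height % 8 != 0 or width % 8 != 0:
        raise ValueError(
            f"height and width must be divisible by 8, got {height}x{width}"
        )


def render(out_path: str = "./dgs_example_3.png") -> None:
    """Render Example 3 and save it (needs diffusers, torch and a CUDA GPU)."""
    import torch
    from diffusers import StableDiffusionPipeline

    check_size(EXAMPLE_3["height"], EXAMPLE_3["width"])
    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    image = pipe(**EXAMPLE_3).images[0]
    image.save(out_path)
```

Keeping the settings in one dictionary makes it easy to try another resolution: change `height` and `width`, and `check_size` rejects values the pipeline would refuse.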

---

**NOTE: usage of this model implies acceptance of Stable Diffusion's [CreativeML Open RAIL-M license](LICENSE)**

---
