A woman in a costume standing in the desert.

A woman wearing a blue jacket and scarf.

A young woman in a blue dress performing on stage.

A woman with black hair and a striped shirt.

A woman with white hair and white armor is holding a sword.

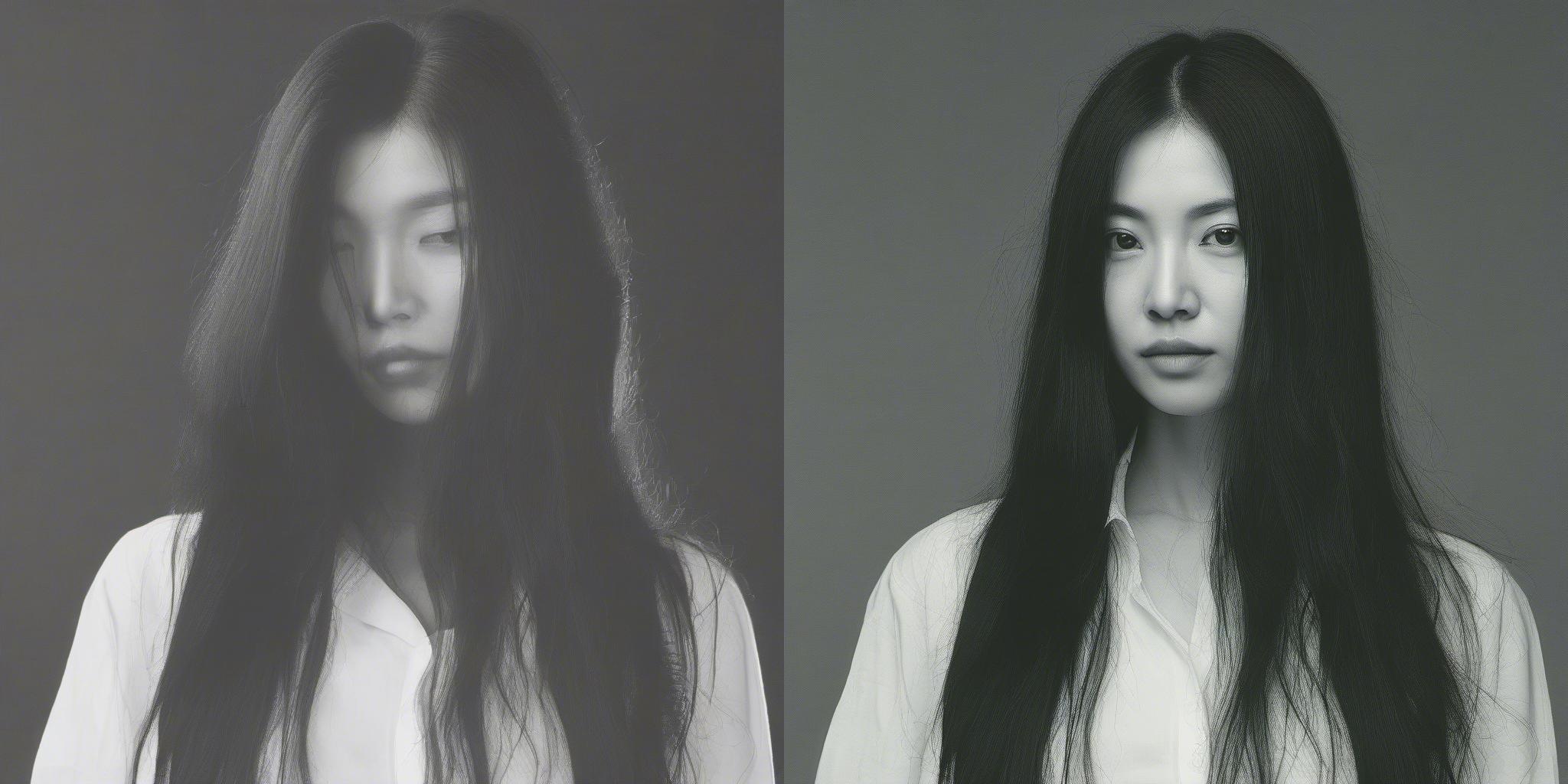

A woman with long black hair and a white shirt.